Median Value for high ISO noise reduction in image stacks

Taking the median value for each pixel position (the middle value of the sorted list of that pixel's readings across a stack of images) can be used to successfully reduce noise in an image.

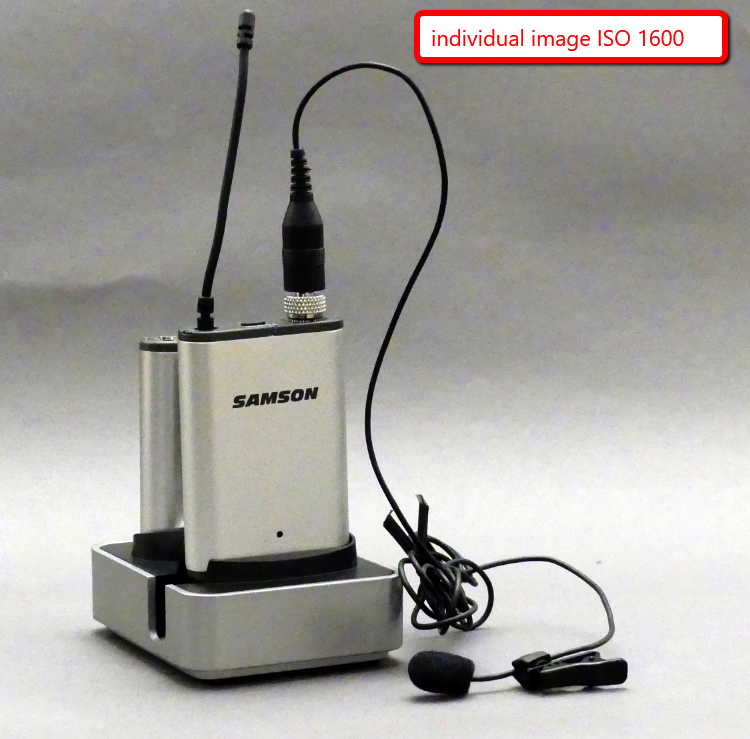

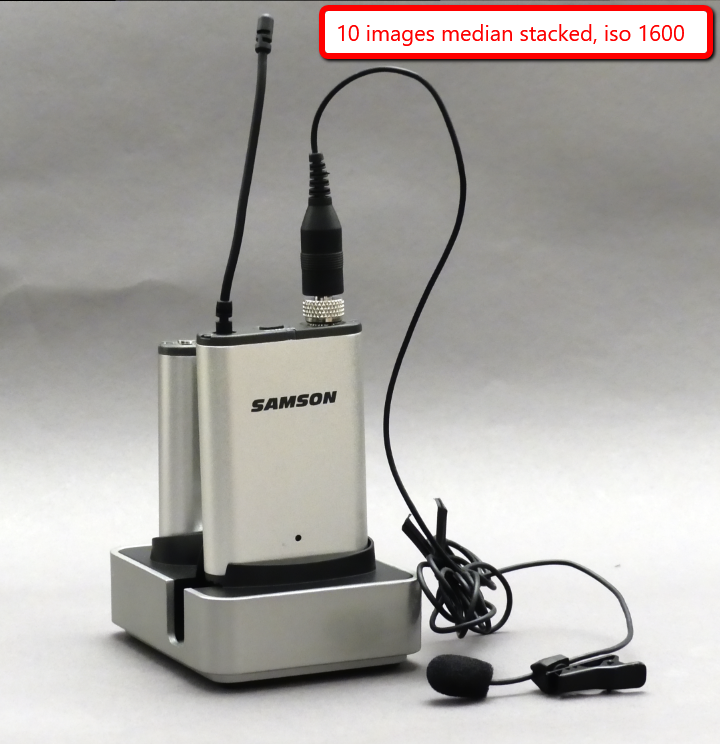

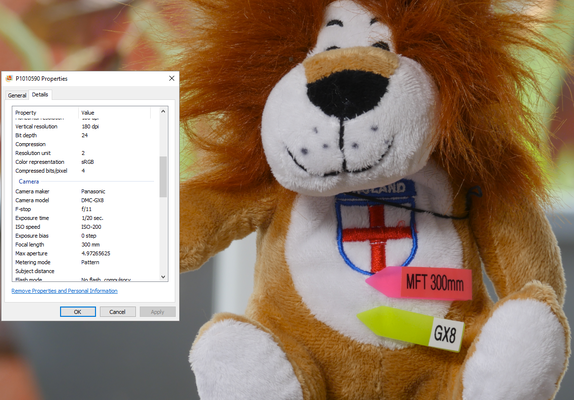

The two images show a 100% crop of a before and after comparison of the process.

As you can see, the colour noise in the original image has been removed, leaving a much smoother, noise-free image.

The process is detailed below.

The process

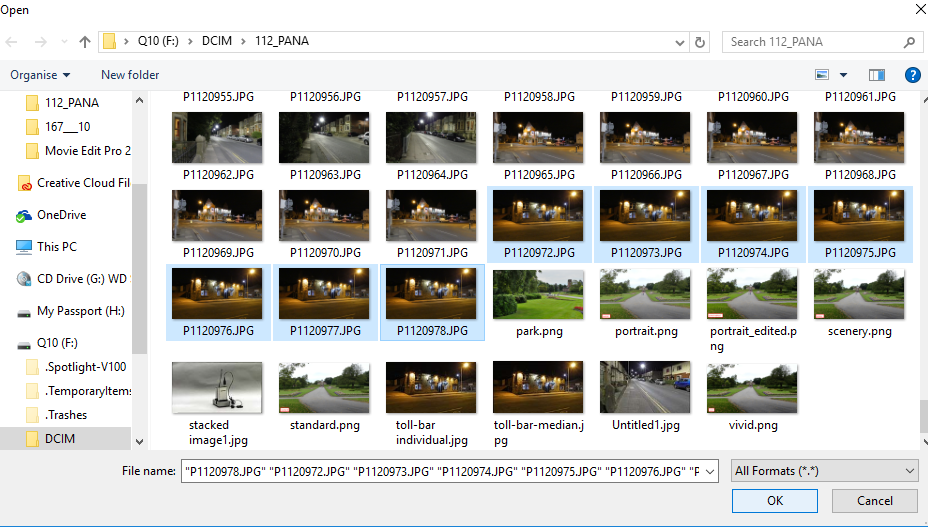

The process begins by importing the group of images using the Photoshop script File > Scripts > Load Files into Stack.

Select the files using the Browse option.

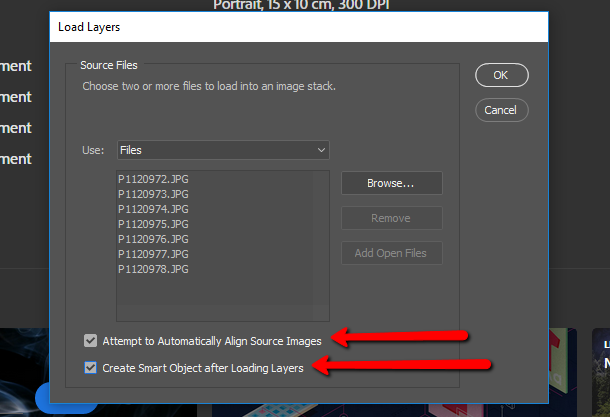

Tick the two boxes to auto-align the images and to create a Smart Object.

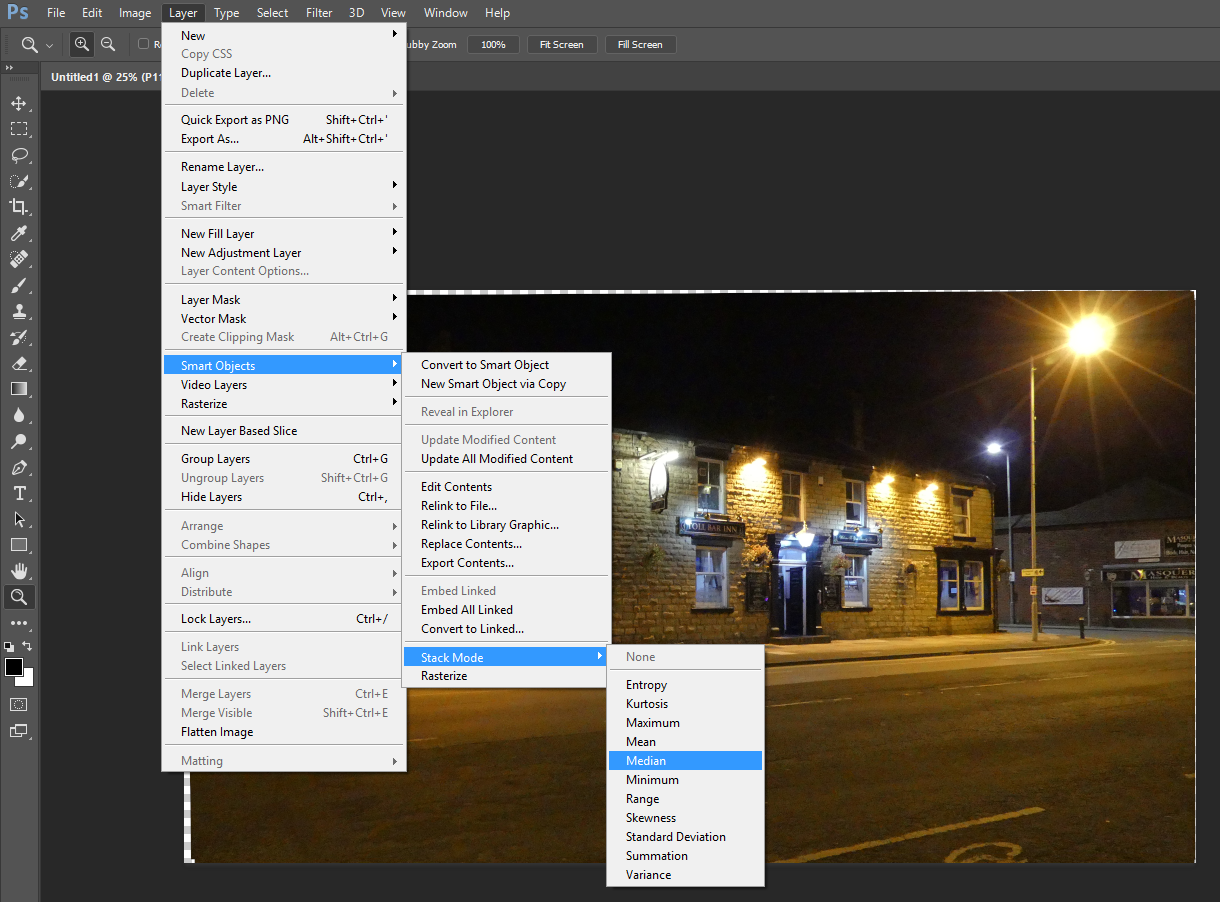

Once the images have been loaded into Photoshop, select Layer > Smart Objects > Stack Mode > Median.
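Photoshop does the work internally, but the per-pixel operation behind a median stack can be sketched in Python with NumPy. This is a minimal illustration, not Photoshop's implementation: it assumes the frames are already aligned and loaded as equal-sized arrays (loading and alignment are not shown), and the 2 x 2 "frames" are hypothetical data.

```python
import numpy as np

def median_stack(frames):
    """Per-pixel median of aligned frames (H x W or H x W x C arrays)."""
    stack = np.stack([np.asarray(f, dtype=np.float32) for f in frames], axis=0)
    # Median along the frame axis: each output pixel is the middle value of
    # that pixel's readings, so a noise spike in one frame is rejected.
    return np.median(stack, axis=0)

# Hypothetical 2x2 example: the same flat scene with a noise spike in each frame.
frames = [
    np.array([[10, 10], [10, 90]]),
    np.array([[10, 80], [10, 10]]),
    np.array([[70, 10], [10, 10]]),
]
result = median_stack(frames)
print(result)  # every pixel comes out as 10.0
```

A simple mean of the same frames would smear each spike into the result (the top-left pixel would average 30), which is why the median copes better with random noise.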

The final image can be sharpened using your favourite method.

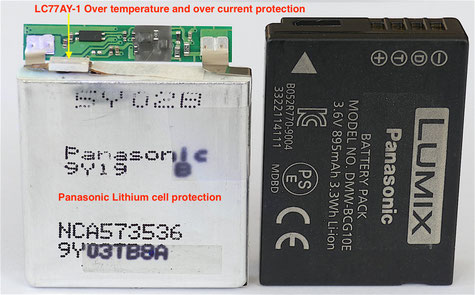

Lithium-ion batteries, cell protection features

There is, in my opinion, quite a lot of misguided information regarding the safety of lithium-ion batteries. Stories of bursting batteries, venting flames and explosions regularly make the news headlines. However, most of these involve the heavy-duty cells fitted in the likes of hoverboards and other high-current-drain devices. For cameras and mobile phones, if you consider the worldwide total of devices in circulation against the reported failures, the failures are a very, very small percentage. Nevertheless, it is wise to ensure that the batteries you use do have some protection. All the camera batteries in my collection, even those that are quite a number of years old, have some protection circuitry built in. The 18650 and similar cells can be purchased without integral cell protection, and extreme care must be exercised when charging and using these cells to prevent injury or equipment damage.

I've been dismantling quite a number of Panasonic batteries, and a few purchased on Amazon, to see how cell protection is implemented and whether the high premium charged for OEM batteries is in fact justified. I've come across quite a few batteries that appear to be from different manufacturers but use the same cells and protection PCBs, so I am guessing that they are made by one company and screen printed to each client's requirements.

Here are some of my investigative results for these batteries.

The 3.6V single-cell batteries all have an electronic fuse in series with the cell output. It interrupts the output if the surface temperature of the cell reaches 77 degrees centigrade.

This applies during either charging or discharging. It also acts as a short-circuit protection feature if the terminals are accidentally shorted, or if the camera develops a fault that causes high currents to be drawn from the cell. It is an auto-reset fuse, restoring itself when the temperature drops below the set level.

The 7.2 volt batteries have two 3.6V cells in series, with MOSFET circuits controlling the charging and discharging. These appear to be controlled by a Microchip microcontroller; however, it is potted in resin, so it is impossible to reverse engineer.

I am guessing, but it is probably responsible for the different charge stages applied to the cells during charging.

These stages are a pre-conditioning charge, which allows a cell that has gone into deep discharge to be charged safely, then the normal charge, and finally a stage that allows the cell voltage to rise to the cut-off voltage at 100% charge. It may also report the percentage of charge remaining to the camera via the battery's Data terminal.

It too has the LC77AY-1 thermal/short-circuit fuse in series with each cell.

The first "generic" BLC12E battery that I opened was still rated at 1200mAh, but its terminal voltage was 8.4 volts.

I guess this is due to a DC/DC converter, which may allow the cells to discharge to a slightly lower level (but still above the safe minimum voltage), thus giving the impression of a higher capacity.

It does have the usual battery management circuitry; however, it does not have the same thermal protection as the Panasonic batteries.

The battery with the DSTE logo on it appears to have the same cell management circuit board and the same cells. However, it is marked as 1700mAh and again has a terminal voltage of 8.4V.

I haven't fully reverse engineered the control board yet, as some of the IC markings have been removed, but it could be that raising the terminal voltage has the same effect as the external power supply available for the BLC12 (the DMW ACC8), which also has an 8.4 volt terminal voltage.

With any lower voltage, the camera reports "This battery cannot be used".

My guess is that, because there is no data communication between these batteries and the camera, the 8.4 volts is used to distinguish an external power supply (8.4V) from a fully coded battery (7.2V).

The cell power management PCB installed in both third-party BLC12E replacement batteries.

Lithium Battery Charging and maximising charge/discharge cycles

Lithium cells have many advantages over other battery chemistries. They don't suffer from the memory effect experienced with Ni-Cd cells, and they hold a fairly constant terminal voltage during discharge. Charging has to be well controlled to minimise the risk of thermal runaway, cell venting and flames. A lithium-ion charger is a voltage-limiting device similar to a lead acid charger. The differences with Li-ion lie in a higher voltage per cell, tighter voltage tolerances and the absence of trickle or float charge at full charge.

While lead acid offers some flexibility in terms of voltage cut off, manufacturers of Li-ion cells are very strict on the correct setting because Li-ion cannot accept overcharge.

Li-ion cells having the traditional cathode materials of cobalt, nickel, manganese and aluminum typically charge to 4.20V per cell. The tolerance is +/–50mV per cell. Some nickel-based varieties charge to 4.10V per cell.

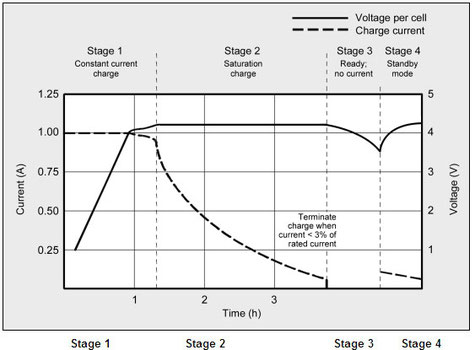

The stages in Li-Ion charging.

Stage 1: the cell voltage rises as a constant current is applied to the cell.

Stage 2: the voltage peaks (4.2V) and the current starts to decrease.

Stage 3: the charge terminates, usually when the current drops to 3% of the rated current.

Stage 4: some chargers apply an occasional topping-up charge.
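The stages above can be sketched as a simple decision function that reports which stage a CC/CV charger would be in for a measured cell voltage and charge current. This is an illustration only: the 3.0V pre-conditioning threshold is an assumed figure, and the 3% termination point is interpreted here as 3% of the rated capacity expressed in mA (0.03C).

```python
def charge_stage(cell_v, charge_ma, capacity_mah,
                 v_full=4.20, v_precondition=3.0, term_fraction=0.03):
    """Return the stage a simple CC/CV Li-ion charger would be in.

    Assumed thresholds: 4.20V full voltage, 3.0V pre-conditioning threshold,
    termination when the current falls to 3% of the rated capacity (0.03C).
    """
    if cell_v < v_precondition:
        return "pre-condition"     # deeply discharged: small current only
    if cell_v < v_full:
        return "constant current"  # Stage 1: voltage rises at constant current
    if charge_ma > term_fraction * capacity_mah:
        return "saturation"        # Stage 2: held at 4.20V while current tapers
    return "terminated"            # Stage 3: current below threshold, charge off

print(charge_stage(3.9, 600, 1200))  # constant current
print(charge_stage(4.2, 30, 1200))   # terminated
```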

Cells are normally charged at 0.5C or 1C, that is, at a current of half or the full value of their rated capacity. This maximises cell life and keeps the temperature rise to under about 5 degrees centigrade (9 degrees F) during charging.

Increasing the charge current does not hasten the full-charge state by much. Although the battery reaches the voltage peak quicker, the saturation charge will take longer accordingly. With higher current, Stage 1 is shorter but the saturation during Stage 2 will take longer. A high current charge will, however, quickly fill the battery to about 70 percent.

Li-ion does not need to be fully charged, nor is it desirable to do so. In fact, it is better not to fully charge because a high voltage stresses the battery.

Choosing a lower voltage threshold, or eliminating the saturation charge altogether, prolongs battery life but reduces the battery runtime.

Chargers for consumer products often go for maximum capacity and cannot be adjusted; extended service life is perceived as less important.

Some lower-cost consumer chargers may use the simplified “charge-and-run” method that charges a lithium-ion battery in one hour or less without going to the Stage 2 saturation charge. “Ready” appears when the battery reaches the voltage threshold at Stage 1. State-of-charge (SoC) at this point is about 85 percent, a level that may be sufficient for many users.

| Charge V/cell | Capacity at cut-off voltage | Charge time | Capacity with full saturation |
|---|---|---|---|
| 3.80 | 60% | 120 min | ~65% |
| 3.90 | 70% | 135 min | ~75% |
| 4.00 | 75% | 150 min | ~80% |
| 4.10 | 80% | 165 min | ~90% |
| 4.20 | 85% | 180 min | 100% |

The table above shows the effect of limiting the charging voltage on the calculated capacity. Using a 3.80V cut-off charging voltage gives just 60% capacity (about 65% with full saturation). Raising the charge voltage to 4.00V delivers 75%, and at the full rated 4.20V the cell reaches 100% after the saturation charge period.

Li-ion cannot absorb overcharge. When fully charged, the charge current must be cut off. A continuous trickle charge would cause plating of metallic lithium and compromise safety. To minimize stress, keep the lithium-ion battery at the peak cut-off as short as possible.

Once the charge is terminated, the battery voltage begins to drop. This eases the voltage stress. Over time, the open circuit voltage will settle to between 3.70V and 3.90V/cell. Note that a Li-ion battery that has received a fully saturated charge will keep its voltage elevated for longer than one that has not received a saturation charge.

Lithium-ion operates safely within the designated operating voltages; however, the battery becomes unstable if inadvertently charged to a higher than specified voltage. Prolonged charging above 4.30V on a Li-ion designed for 4.20V/cell will plate metallic lithium on the anode.

The cathode material becomes an oxidizing agent, loses stability and produces carbon dioxide (CO2).

The cell pressure rises and if the charge is allowed to continue, the current interrupt device (CID) responsible for cell safety disconnects at 1,000–1,380kPa (145–200psi).

Should the pressure rise further, the safety membrane on some Li-ion bursts open at about 3,450kPa (500psi) and the cell might eventually vent with flame.

Lithium-based batteries should always stay cool on charge. Discontinue the use of a battery or charger if the temperature rises more than 10ºC (18ºF) above ambient under a normal charge. Li-ion cannot absorb over-charge and does not receive trickle charge when full. It is not necessary to remove Li-ion from the charger; however, if not used for a week or more, it is best to place the pack in a cool place and recharge before use.

Source: Battery University, http://batteryuniversity.com/learn/article/charging_lithium_ion_batteries

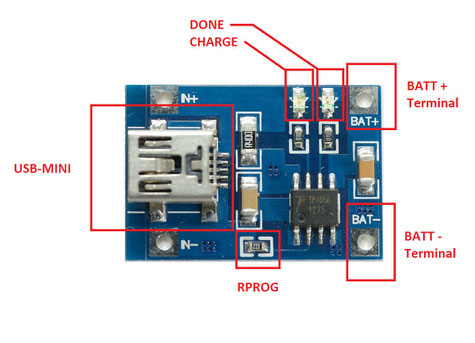

Fortunately, there are many single, double or triple Li-ion charger PCBs available.

This one, using the TP4056 chip, takes a 5V USB input and provides a charging current set by the RPROG resistor. The chip has the pre-conditioning charge and the saturation charge to the cut-off voltage built in.
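As a rough guide to choosing RPROG, the sketch below combines the 0.5C/1C charge rates mentioned earlier with the relation I_BAT = (V_PROG / R_PROG) x 1200, V_PROG = 1V, which is how I understand the TP4056 datasheet to specify it. Treat that relation as an assumption and check the datasheet for your particular module before changing any resistor.

```python
def charge_current_ma(capacity_mah, c_rate):
    """Charge current for a given C-rate (1C = the rated capacity in mA)."""
    return capacity_mah * c_rate

def tp4056_rprog_kohm(charge_ma, v_prog=1.0):
    """RPROG in kilohms, assuming I_BAT = (V_PROG / R_PROG) x 1200, V_PROG = 1V."""
    # I_BAT[A] = V_PROG / R_PROG[ohm] * 1200  =>  R_PROG[kohm] = V_PROG * 1200 / I_BAT[mA]
    return v_prog * 1200 / charge_ma

target = charge_current_ma(1200, 0.5)     # a 1200mAh cell charged at 0.5C
print(target, tp4056_rprog_kohm(target))  # 600.0 2.0
```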

There's an interesting article written by Anna Kučírková about the history and the future direction that lithium batteries might take.

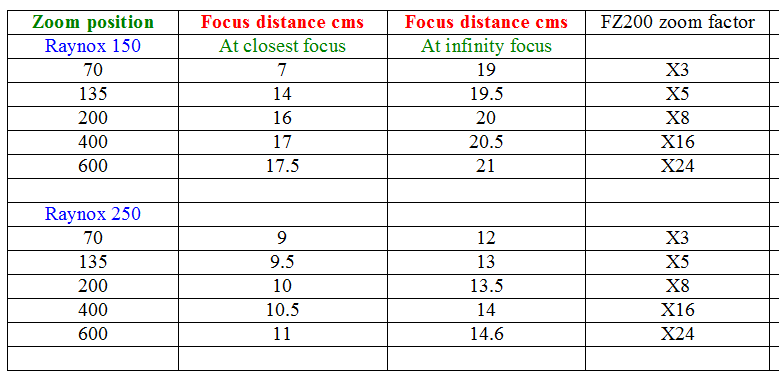

Polaroid 250D Achromatic Close Up Lens

I've been testing this lens as a cheaper alternative to the Raynox 150 triple-element achromatic lens. The Raynox lens has a small rear element and causes vignetting with the FZ200/300/330 unless it is used beyond x4 zoom. The 52mm Polaroid lens fits directly onto the camera lens and can be used from the widest zoom setting without any vignetting. Whilst this may not be a consideration if you are using the lenses for larger magnifications at full x24 zoom, the Polaroid has the advantage that it can be used closer to the subject. In terms of image quality, in my brief testing the Polaroid lens does seem to have better resolving power, giving sharper looking images; however, this is subjective and marginal. So if you want a lens that will image from x1 zoom without any vignetting, this is the lens to choose.

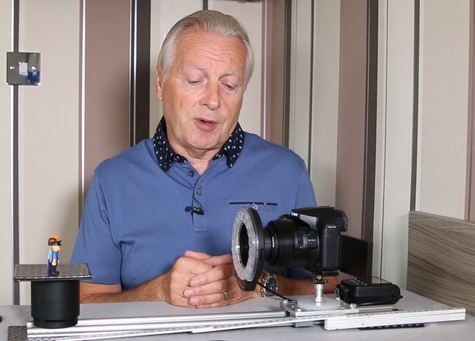

To establish the actual working distance between the front face of the Raynox lens and the subject, I placed the camera and lens on a sliding plate and then, for each zoom position, measured the distances at both the closest focus and infinity focus positions.

For consistent lighting, I used the Mcoplus LED ring light but did not place the controller on the hot shoe, so the camera remained in TTL metering mode; thus, as the lens moved nearer to the subject, the exposure was compensated.

To give a sense of scale for the magnification shown in the video, this is the subject used. The models are 4cm tall and the faces are 1cm across.

A sample of the illustration from the video

Using the Raynox 150 lens at 200mm (x8 on the FZ200), with the focus set manually to the minimum setting, the camera focussed at 16cm. At infinity focus the distance increases to 20cm; however, there is only a slight change in the overall magnification.
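The infinity-focus figure can be sanity-checked with the thin-lens rule for close-up lenses: with the camera lens focused at infinity, the subject sits at roughly the close-up lens's own focal length, f = 1000 / D mm, where D is its power in dioptres. The sketch assumes a rating of about +4.8 dioptres for the Raynox 150, a figure not given in this article, so check it against the lens documentation.

```python
def closeup_focal_length_mm(dioptres):
    """Focal length of a close-up lens in mm: f = 1000 / D."""
    return 1000 / dioptres

# Assumed rating of about +4.8 dioptres for the Raynox 150 (not stated above).
print(round(closeup_focal_length_mm(4.8)))  # 208 (mm), i.e. roughly 20cm
```

Because this distance depends only on the close-up lens, it stays roughly the same whatever zoom setting is used, which fits the small change between the two focus positions.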

The Raynox lens, removed from its usual mounting clip and fitted to the camera with a 52mm to 43mm step-down ring.

amazon.com http://amzn.to/28S1Per

amazon uk http://amzn.to/28OWku4

This keeps the lens as close to the main lens as possible and maintains the central optical axis and a parallel focus plane.

The tabulated results from the YouTube video.

DIY Light Meter Project Update

I started a project a couple of years ago to rekindle my love of electronics and microprocessor programming. I thought this was a good way to keep my brain active and help prevent age-related problems such as memory loss.

I must admit it was hard to find sufficient time for this activity because, as anyone who has tried programming will tell you, it takes a considerable amount of time to relearn and get projects working. I created the first light meter using a few components that I already had, and it is shown below.

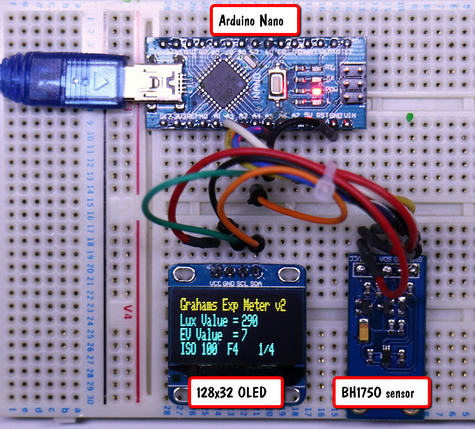

Since I am working towards producing a controller to allow Canon EOS EF/EFS lenses to be controlled by a microprocessor, I needed to get my programming back up to a reasonable level, as this project would require some pretty challenging coding. I thought an update to the light meter might be a good way to start. I looked at updating the project to use the kind of protocols that would be needed for the lens controller and found some small OLED displays that use I2C communication. I started the project again with a view to miniaturising the original unit and getting to grips with the new protocol coding. The new version is shown below.

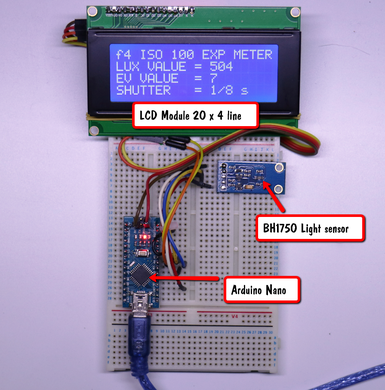

This is the original unit which used a 20 character by 4 line LCD display.

Whilst it does include an I2C interface to drive the LCD display, it makes quite a large unit, and that is why I never finished the project off and put it into a suitable box.

So the new unit uses the same Arduino Uno microcontroller board and the same BH1750 light sensor module (which also uses I2C communication) but now includes the 128 x 64 dot OLED display.

The display doesn't have a unique address, which is unusual, as I2C devices are normally provided with a unique address (or a choice of a couple of addresses). Fortunately the BH1750 does, so I was able to put both devices on the I2C bus; since the sensor had a unique address, it was possible to communicate with it whilst the OLED ignored the codes on the bus. For a more serious project this would need to be resolved, as garbage could end up being displayed at some point if the OLED controller latched onto one of the BH1750 writes!

The unit reads the light level in lux from the BH1750 sensor, converts this to an EV value, and finally uses that value to give the shutter speed for an exposure at ISO 100 and F4.
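One way to do the lux-to-EV step is the incident-light meter equation, N²/t = E·S/C, so EV = log2(E·S/C). The constant C = 250 used below is a commonly quoted calibration value for incident meters and is an assumption here; the meter described in this post uses its own look-up table instead.

```python
import math

def lux_to_ev(lux, iso=100, c=250.0):
    """Incident-light EV from illuminance: EV = log2(E * S / C).

    c = 250 is an assumed, commonly quoted incident-meter calibration constant.
    """
    return math.log2(lux * iso / c)

print(lux_to_ev(2.5))   # 0.0  (2.5 lux is EV 0 at ISO 100 with C = 250)
print(lux_to_ev(2560))  # 10.0
```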

If I need any other aperture or shutter combination, then it's simply a case of interpolation in my head. For example, the GX8 only starts at ISO 200, so with this unit I would simply halve the exposure time or use the next smaller aperture (one stop down), which would be 1/8 at F4 or 1/4 at F5.6 in the case of the display shown in the adjacent image.
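The mental arithmetic above follows from the exposure relationship: doubling the ISO halves the exposure time needed, and the time scales with the square of the f-number. A small sketch (the function name is illustrative) reproduces the GX8 example; note that F5.6 is the nominal marking for 4√2, so the result is only approximately 1/4.

```python
def equivalent_shutter_s(t_s, iso_from, n_from, iso_to, n_to):
    """Shutter time giving the same exposure at a new ISO and f-number."""
    # Exposure time is inversely proportional to ISO and proportional to N squared.
    return t_s * (iso_from / iso_to) * (n_to / n_from) ** 2

# A reading of 1/4 s at F4, ISO 100, converted for ISO 200:
print(equivalent_shutter_s(1 / 4, 100, 4.0, 200, 4.0))  # 0.125, i.e. 1/8 s at F4
print(equivalent_shutter_s(1 / 4, 100, 4.0, 200, 5.6))  # about 0.245, roughly 1/4 s at F5.6
```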

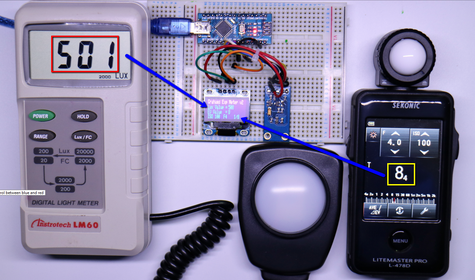

I checked the accuracy of the unit using both a lux meter and my Sekonic light meter (which I use for flash photography), and it was well within 1/3 stop (the design uses a look-up table rather than an EV-to-exposure equation).

I was quite pleased with the outcome so far.

If I can find the right equation to calculate shutter speed from EV then I'll revisit the project to provide 1/10 F-stop accuracy.

I believe $EV = \log_2(N^2/t)$ would give it if I transposed the equation to give t (shutter speed in seconds), where N is the aperture (f-number); however, my maths knowledge is too far in the past to allow me to get the right transposition for t.

Update

Thanks to another reader (Phil Carroll), I now have the correct transformation of the equation:

The transformation you need is fairly easy with a little algebra. First:

$$X = \log_k N \iff N = k^X$$

Therefore

$$EV = \log_2\left(\frac{N^2}{t}\right) \iff \frac{N^2}{t} = 2^{EV}$$

Therefore

$$t = \frac{N^2}{2^{EV}}$$

which becomes (by algebraic transposition, to make computation easier on simple processors):

$$\log_2 t = \log_2\left(N^2\right) - EV$$

And therefore:

$$\log_2 t = \log_2 N + \log_2 N - EV = 2\log_2 N - EV$$
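Both forms of the transposed equation can be checked against each other in a few lines. This is a sketch with illustrative names; an Arduino version would use the C equivalents of `log2` and `pow`. EV 6 at F4 gives 1/4 s either way.

```python
import math

def shutter_time_s(ev, n):
    """t = N^2 / 2^EV, with N the f-number and t in seconds."""
    return n ** 2 / 2 ** ev

def shutter_time_log_s(ev, n):
    """Same result via log2(t) = log2(N) + log2(N) - EV."""
    log2_t = math.log2(n) + math.log2(n) - ev
    return 2 ** log2_t

print(shutter_time_s(6.0, 4.0))      # 0.25, i.e. 1/4 s at F4
print(shutter_time_log_s(6.0, 4.0))  # 0.25 via the log form
```

Because EV can be fractional (for example 6.3), either form gives a continuous shutter speed rather than the 1/3-stop steps of a look-up table.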

Extreme Macro with Reverse Mounted Lenses on Canon EOS Cameras

To get extreme magnification, it is possible to take a standard "kit" lens and mount it "the wrong way round" on the camera body. Doing this with lenses that have manual aperture control (like the FD series) requires just a simple adaptor with the EF mount on one side and a filter thread ring of a suitable size on the other. However, doing this with lenses that have electronic aperture control needs a special adaptor cable, which allows the lens to be controlled by the camera body as though it were mounted normally.

The Meike MK-C-UP adaptor (Amazon) has been designed for this purpose. It can also be used with the cheaper non-automatic extension tubes, or even bellows, for lenses mounted in the traditional way.

Magnification will depend upon the focal length of the lens used. At the 18mm end of the standard kit lens the magnification is extreme and the working distance is very small. Lighting the subject becomes a real issue in this mode. At 24mm the working distance becomes about 25mm (1 inch) and allows some lighting possibilities. At the 50mm focal length the working distance has extended to about 40mm (1.5inch).

I've found the best way to work is as follows. Set the lens to the focal length you want (say 50mm). Set the lens to infinity focus (focus ring fully counterclockwise) and turn image stabilisation off. The camera is easier to control if you use a slider plate to allow small changes in focus distance. Focussing is done by moving the camera relative to the subject (or the subject relative to the camera). Similarly, the subject is best mounted so that its position can be controlled. For small, flat objects, I attach them to a small slider on a table-top tripod so the vertical position can be set precisely. Use live view and mirror lock-up, if your camera supports it, so that images are captured without camera shake from the mirror flipping up. Use an aperture of around F16 (smaller apertures tend to soften the image through diffraction) and use the camera's self-timer to trigger the exposure.
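The reason small apertures soften macro images can be sketched numerically. At close range the working f-number grows as N_eff = N × (1 + M / P), where M is the magnification and P the pupil magnification, and the Airy disk diameter is about 2.44 × wavelength × N_eff. The example assumes P = 1 (reversed lenses generally differ), green light at 550nm and a hypothetical 1:1 magnification.

```python
def effective_f_number(n, magnification, pupil_magnification=1.0):
    """Working f-number at close range: N_eff = N * (1 + M / P)."""
    return n * (1 + magnification / pupil_magnification)

def airy_disk_um(n_eff, wavelength_nm=550):
    """Airy disk diameter (to the first dark ring): 2.44 * lambda * N_eff."""
    return 2.44 * (wavelength_nm / 1000) * n_eff

# Hypothetical: F16 set on the lens at about 1:1 (M = 1), P assumed to be 1.
n_eff = effective_f_number(16, 1.0)
print(n_eff, round(airy_disk_um(n_eff), 1))  # 32.0 42.9
```

A blur spot of roughly 43 micrometres covers many pixels on a sensor whose pixels are a few micrometres across, so stopping down much beyond F16 costs more sharpness than it gains in depth of field.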

In addition to reverse-mounting a lens, you can also use the kit to capture macro images with the lens mounted in the conventional way, inserting the body sections of non-automatic extension tube sets (use the Meike brand to ensure the correct thread) or even bellows between the lens and the camera body.
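For the conventional extension tube or bellows setup, a handy rule of thumb is that the magnification added by extension is roughly the extension divided by the focal length, M ≈ e / f, with the lens focused at infinity. This is a thin-lens approximation; zoom and retrofocus kit lenses will differ somewhat, and the 36mm of tubes is a hypothetical example.

```python
def extension_magnification(extension_mm, focal_mm):
    """Approximate magnification from extension, lens at infinity: M = e / f."""
    return extension_mm / focal_mm

# Hypothetical example: 36mm of extension tubes behind a 50mm lens.
print(extension_magnification(36, 50))  # 0.72
```

The same relation explains why shorter focal lengths give more magnification for a given amount of extension.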

Using OM lenses on Micro Four Thirds Cameras - Is There A Loss Of Quality?

I have recently been working on a project to use Canon EOS EF/EFS lenses on my Micro Four Thirds bodies, in particular the GX8 with its 20 megapixel resolution. At the moment it is at the prototype stage, but I had recently read that using full frame lenses on cropped sensors leads to a loss of image resolution.

This has been circulating for some time now on the popular camera forums. Basically, the theory goes that lenses designed for 24MP full frame cameras cannot resolve enough detail for the higher pixel density found on smaller sensors such as Micro Four Thirds. It is further argued that many full frame lenses do not have sufficient resolving power for the newer, higher pixel count full frame sensors, the result being very soft looking images.
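The theory rests on pixel pitch: the finer the pixel spacing, the more line pairs per millimetre the lens must deliver before the sensor becomes the limit (one line pair needs two pixels). The sketch uses assumed typical figures, 6000 pixels across a 36mm full frame sensor and 5184 pixels across a 17.3mm Micro Four Thirds sensor such as the GX8's, which are not taken from this article.

```python
def pixel_pitch_um(sensor_width_mm, pixels_across):
    """Centre-to-centre pixel spacing in micrometres."""
    return sensor_width_mm * 1000 / pixels_across

def nyquist_lp_per_mm(sensor_width_mm, pixels_across):
    """Finest detail the sensor can record: one line pair needs two pixels."""
    return pixels_across / (2 * sensor_width_mm)

# Assumed typical figures (not from the article).
for name, width_mm, px in (("24MP full frame", 36.0, 6000), ("20MP MFT", 17.3, 5184)):
    print(f"{name}: {pixel_pitch_um(width_mm, px):.2f} um pitch, "
          f"{nyquist_lp_per_mm(width_mm, px):.0f} lp/mm")
```

On these figures the Micro Four Thirds sensor samples at roughly 150 lp/mm against about 83 lp/mm for the full frame sensor, which is the gap the forum theory is worried about. Whether a given lens actually falls short is what the test below sets out to check.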

The obvious question I had to answer for myself was just how badly these lenses perform when forming an image on a Micro Four Thirds sensor, and whether it would be better to just buy a lens of the required focal length designed for Micro Four Thirds instead.

I set up a little test to give me some indication of the image quality I would get from three of my favourite EOS lenses: the 70-200mm F4L IS, the 100-400mm F4.5-5.6L IS and my 100mm F2.8 macro. I would compare this with the image quality of a 100-300mm Micro Four Thirds lens.

I took the reference images on the Micro Four Thirds camera, then the same reference images with the Canon lenses on the Canon 60D, before mounting the lenses on my Commlite EOS-MFT active adaptor.

Taking into consideration the 2x crop factor of the micro four thirds system I tried to get the same image magnification for each test shot.

Here are some of the resulting images.

Using the Panasonic GX8 with the Commlite active adaptor, I captured a series of images with the Canon 70-200mm F4L IS lens and the Canon 70-300mm F4-5.6 IS lens, and a few sample images are shown below.

Now I don't think the image quality is all that bad despite some sites warning that shooting full frame lenses on micro four thirds systems leads to very soft images!

....to be continued!!!

Panasonic Bridge Camera and Aspect Ratio Equivalent Focal Length Change

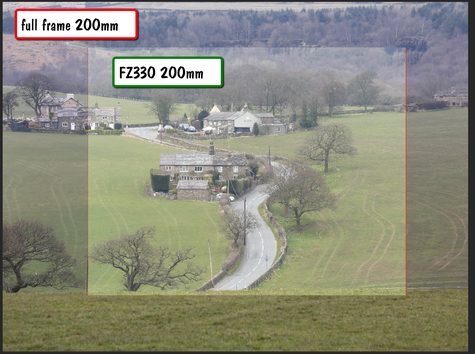

I made a statement in a previous newsletter about photographing the moon, suggesting that if you use the 1:1 aspect ratio you would be using a much longer focal length. This was based on the specifications published on the Panasonic website. Here's the extract for the FZ330/300 camera.

As you can see if you select the 1:1 aspect ratio the quoted equivalent 35mm focal length becomes 30 - 720mm.

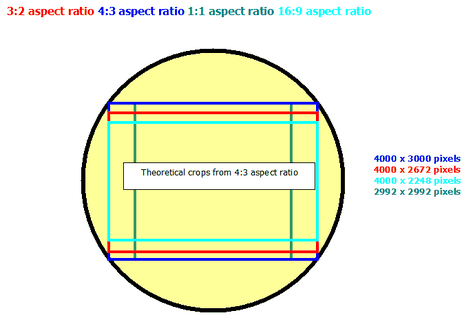

After some feedback from you about this, I checked and found that the subject was exactly the same size in the image at 1:1 as at 4:3, 3:2 and 16:9. There was clearly no change in the angle of view of the lens or in its magnification. That led to a lot of mathematics to work out how Panasonic arrived at its figures, and further investigation to show that this marketing specification was, at the very least, misleading to consumers. Here's what I have deduced.

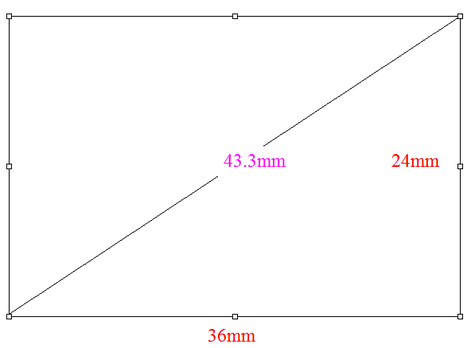

First, we are talking about equivalent 35mm (or full frame) focal lengths, and we must understand how these are derived.

The principle of the crop factor is a comparison of the diagonal of the "cropped" sensor with that of the 35mm frame (43.3mm).

Using Pythagoras' theorem, the diagonal is found by taking the square root of the width squared plus the height squared.

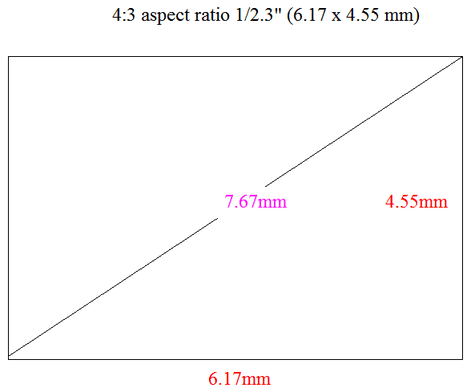

Using the same formula to calculate the diagonal of the sensor in the FZ300/330 (a 1/2.3" sensor), we arrive at 7.67mm.

Now, this is not the same aspect ratio as 35mm (full frame) sensors. However, I haven't found a manufacturer who applies the correct adjustment for this in their calculations, so I haven't addressed it here.

To arrive at the "crop factor", dividing 43.3 by 7.67 gives a value of 5.65.

Now if we look at the native focal lengths of the FZ300/330 we see it is 4.5mm to 108mm.

To establish the "equivalent 35mm" focal lengths, we multiply these by the 5.65 factor and end up with 25.4mm at the wide end and 610mm at the telephoto end. The difference between the claimed 600mm and the theoretical 610mm may be accounted for by the fact that the whole imaging area is not used. There are usually ring or guard pixels around the edge of the image, which would affect the size calculation.

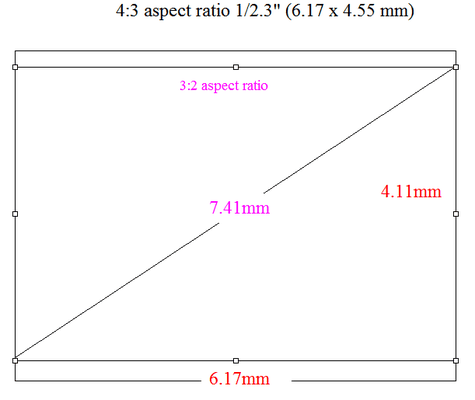

When looking at the 3:2 cropped area from the whole sensor we end up with a diagonal of 7.41mm which results in a calculated crop factor of 5.84. At the telephoto end this would result in an effective 35mm focal length of 108 x 5.84 = 631mm. Again the discrepancy between the calculated value and listed value is due to the actual area used.

We can repeat this for the 16:9 aspect ratio and end up with an effective focal length of 661mm.
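The diagonal-based method above can be reproduced in a few lines. This sketch assumes nominal 1/2.3" sensor dimensions of 6.17mm x 4.55mm (which give the 7.67mm diagonal) and assumes that the 3:2 and 16:9 crops keep the full sensor width and trim the height. Under those assumptions it returns the 610mm, 631mm and 661mm figures worked out above.

```python
import math

FF_DIAGONAL_MM = 43.3            # 35mm frame diagonal, as used in the text
SENSOR_W, SENSOR_H = 6.17, 4.55  # assumed nominal 1/2.3" sensor size (mm)

def crop_factor(width_mm, height_mm):
    """Crop factor: full frame diagonal / sensor (or cropped area) diagonal."""
    return FF_DIAGONAL_MM / math.hypot(width_mm, height_mm)

def equivalent_focal_mm(native_mm, width_mm, height_mm):
    return native_mm * crop_factor(width_mm, height_mm)

# Assumed: aspect-ratio crops keep the full sensor width and trim the height.
for ratio, height in (("4:3", SENSOR_H), ("3:2", SENSOR_W * 2 / 3), ("16:9", SENSOR_W * 9 / 16)):
    print(ratio, round(equivalent_focal_mm(108, SENSOR_W, height)))
```

Note that this only reproduces the arithmetic; as the next section argues, a smaller diagonal from cropping the frame does not change the lens's magnification.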

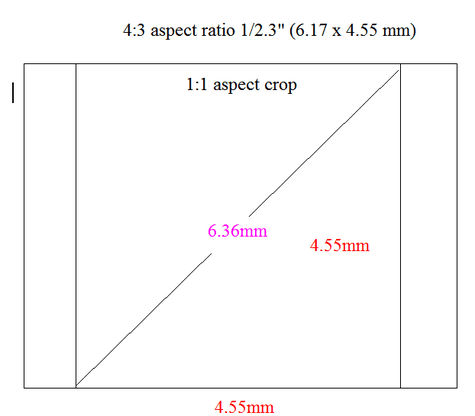

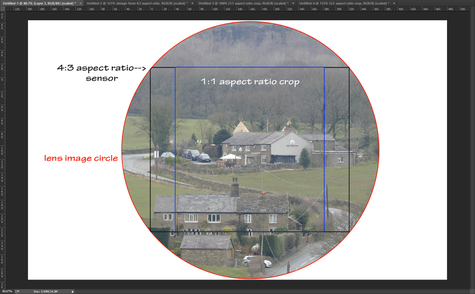

I'm more concerned about the 1:1 aspect ratio, where I suspect this application of the diagonal formula fails to reflect real-world results.

With the 1:1 crop, the image in this camera is not 3000 x 3000 pixels but 2994 x 2994, so this does give a very slight additional crop.

Working through the calculations again we end up with a crop factor of 6.8 and an effective focal length of 735mm

So that's how crop factor is derived under the CIPA guidelines to manufacturers. I suspect that these only apply to the whole sensor and not, as Panasonic is doing here, to each aspect ratio.

In the next section I'll look at the lens imaging circle and angle of view and show why the calculations are invalid.

Let's look at a typical 4:3 camera sensor, like the one in the FZ300/330.

The 4:3 aspect ratio is the largest area that has to be covered by the lens "image circle". When a lens is designed it must have an exit element which is large enough so that it does not cause any vignetting of the image.

Any optical lens has an associated "angle of view" and when the focal length of the lens equals the diagonal measurement of the sensor it is said to be a "normal" focal length. A view which is narrower than this is termed "telephoto" and wider referred to as "wide angle".

Thus a full frame camera with a 43.3mm diagonal usually has a 45-50mm lens as its "normal" lens. The FZ300/330, with a 1/2.3" sensor having a 7.66mm diagonal, has an 8mm "normal" focal length.
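The link between focal length, sensor size and angle of view is the standard formula AOV = 2·atan(d / 2f), where d is the sensor dimension (here the diagonal). The sketch shows why a "normal" lens gives the same view on any format, and why scaling the focal length and the sensor by the same crop factor leaves the view unchanged.

```python
import math

def angle_of_view_deg(dimension_mm, focal_mm):
    """Angle of view across a sensor dimension: 2 * atan(d / 2f)."""
    return math.degrees(2 * math.atan(dimension_mm / (2 * focal_mm)))

# A "normal" lens (f equal to the diagonal) gives about 53 degrees on any format.
print(round(angle_of_view_deg(43.3, 43.3), 1))  # 53.1 (full frame)
print(round(angle_of_view_deg(7.66, 7.66), 1))  # 53.1 (1/2.3" sensor)

# Dividing both the sensor diagonal and the focal length by the crop factor
# leaves the angle of view unchanged.
crop = 43.3 / 7.66
print(math.isclose(angle_of_view_deg(43.3, 200), angle_of_view_deg(7.66, 200 / crop)))  # True
```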

A lens designed with a specific focal length (or, in the case of a zoom lens, an adjustable focal length) will always produce the same angle of view at a given focal length when imaging onto the sensor format it was designed for. In the illustration, left, you can see the overlaid images from a 200mm lens on a full frame camera and from the FZ300/330 set to 200mm (35mm equivalent); the views from the two cameras are virtually the same!

However, if you take a lens intended for a full frame camera and mount it on a camera with a different size sensor, the image produced by that sensor will have an "equivalent" focal length equal to the lens focal length multiplied by the sensor's crop factor. Thus mounting a 400mm full frame lens on a Micro Four Thirds system gives an equivalent focal length of 800mm because of the 2x crop factor, and using it on the Pentax Q, with its 1/2.3" sensor, gives over 2200mm because of the 5.6 crop factor.

In this illustration of a simulated image from the lens optics falling on the FZ300/330 sensor (ok it should be upside down to be absolutely correct!) the lens is set to its maximum focal length of 108mm (although the actual focal length does not matter).

The lens makes an image circle which is just wide enough to fill the 4:3 native aspect ratio of this sensor. If we took a picture at the 4:3 aspect ratio we would get the image outlined in black.

Now if we select the 1:1 aspect ratio from the camera's REC setup menu and take a picture, it would be the one represented by the blue outline.

The two images have exactly the same angle of view, there is no change in the focal length, or the equivalent focal length of the lens.

The only way the image would have a different angle of view, and therefore a different equivalent focal length, is if the camera crops the same proportion from both the horizontal and vertical dimensions of the sensor and then interpolates the result in software, scaling it back up to the original sensor dimensions. This is how the i.zoom function works: once the full optical zoom has been reached, the processor starts to crop into the image and immediately re-scales it back to the full pixel dimensions. That is why a 2x limit is applied; otherwise there would be too much image degradation. Digital zoom just scales the whole image and can be applied even after i.zoom to get some really crazy (if horrible) magnifications.
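The crop-and-rescale idea can be sketched with NumPy: take the central 1/k of the frame in both directions, then enlarge it back to the original pixel count. This uses crude nearest-neighbour repetition and assumes the image dimensions divide evenly by the factor; it is not Panasonic's actual i.zoom processing, which will interpolate far more smoothly.

```python
import numpy as np

def crop_zoom(image, factor=2):
    """Centre-crop by an integer factor, then scale back up (nearest neighbour)."""
    h, w = image.shape[:2]
    ch, cw = h // factor, w // factor
    top, left = (h - ch) // 2, (w - cw) // 2
    crop = image[top:top + ch, left:left + cw]
    # Repeating each pixel restores the pixel count but adds no new detail.
    return np.repeat(np.repeat(crop, factor, axis=0), factor, axis=1)

img = np.arange(16).reshape(4, 4)
print(crop_zoom(img))  # the central 2x2 block (5, 6, 9, 10), each pixel doubled
```

Cropping both dimensions equally is what narrows the angle of view and so raises the equivalent focal length; the 1:1 aspect ratio crops only the sides, so the subject stays the same size.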

So what do I conclude here?

Well, the specifications, in my opinion, are totally irresponsible and give prospective purchasers the false hope that the camera will give an extended zoom if it is set to the 1:1 aspect ratio. It clearly does not!

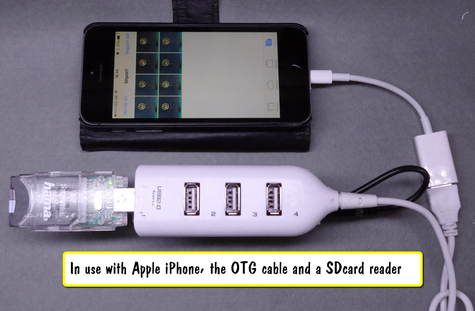

Overcoming the Power Limitations of Bus Powered USB Hubs

If you want to use high-power peripherals with your iPhone, iPad or Android phone, you will probably be greeted with a message saying that the device cannot use that peripheral because it needs too much power.

Whilst there are several USB hubs with a 5V input to provide additional power, they tend to be supplied with mains power units, which are not a lot of use if you want to use these peripherals away from a mains socket. Some have a 2.1mm DC socket, and you could buy a USB to 2.1mm plug adaptor and use it with a 5V USB power bank. But I thought of modifying one of the very cheap USB 2.0 hubs easily available on Amazon and eBay. Here's how I did it.

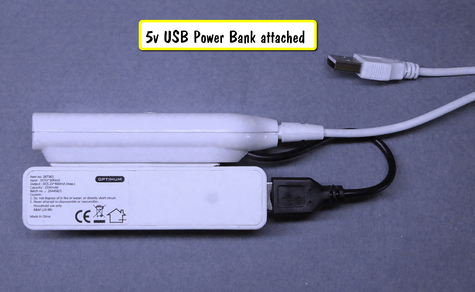

This is the completed project: the modified generic hub with a 5V USB 2100mAh power bank attached.

Here the hub is providing additional power for an SDXC card reader, and the iPhone is displaying the contents of the SDXC card plugged into the reader. The iPhone is connected to the USB hub by Apple's OTG (On-The-Go) cable.

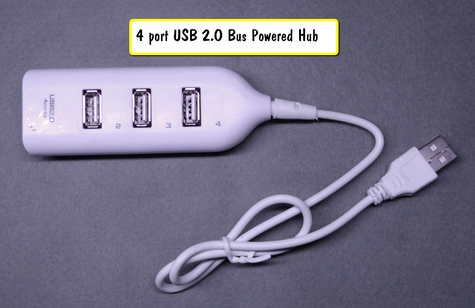

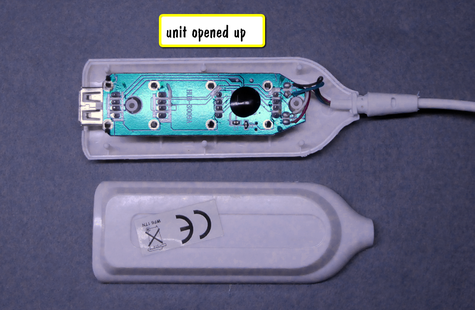

This is the generic 4 port bus powered hub from Amazon before the modification.

By carefully inserting a knife blade between the upper and lower sections, it is fairly easy to disassemble the unit without too much damage. The upper and lower sections are held together by 46 plastic pins and a very light adhesive along the seams.

In the base of the unit I drilled a 4mm hole through the case adjacent to the moulding for the existing cable strain relief.

Take a cheap USB charging lead from a dollar store or pound store (any type, as the phone end is going to be cut off), cut off the phone end and leave about 150mm (6 inches) of cable. Strip the outer sleeving to reveal the black and red inner leads (if you get a data sync cable, cut off the white and green conductors, as they are not needed).

Strip about 6mm (1/4 inch) of insulation from each conductor and tin the leads using a small soldering iron and resin-cored solder.

Push the cable through the hole and solder the leads to the PCB lands for +5V (red) and 0V (black). You could solder directly to the existing red and black leads if you wish, or to the 5V and 0V pins on any of the other USB sockets. Attach a small cable tie to the cable close to the hole in the lower shell to act as a strain relief.

To complete the project, attach the small USB power bank using double-sided adhesive foam tape and connect the USB cable from the modification to the power bank. Without a load, the unit draws virtually no current, so the power bank can be left attached. When a peripheral is plugged into the USB hub, the power bank automatically provides power (usually indicated by a blue light coming on inside the unit). Connect the hub's original USB cable to the OTG cable and then plug it into your smartphone, iPad etc. to enjoy your higher-current peripheral.
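To get a rough idea of how long the power bank will keep a peripheral running, the stored energy can be divided by the load power. The sketch assumes the 2100mAh rating refers to a 3.7V internal cell, an 85% efficient 5V boost converter and a 500mA load (the USB 2.0 port limit); all three are assumptions, so treat the answer as a ballpark figure only.

```python
def runtime_hours(bank_mah, load_ma, cell_v=3.7, out_v=5.0, efficiency=0.85):
    """Rough power bank runtime: stored energy x converter efficiency / load power."""
    energy_wh = bank_mah / 1000 * cell_v  # energy stored in the internal cell
    load_w = out_v * load_ma / 1000       # power drawn at the 5V output
    return energy_wh * efficiency / load_w

print(round(runtime_hours(2100, 500), 1))  # 2.6 (hours) under these assumptions
```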

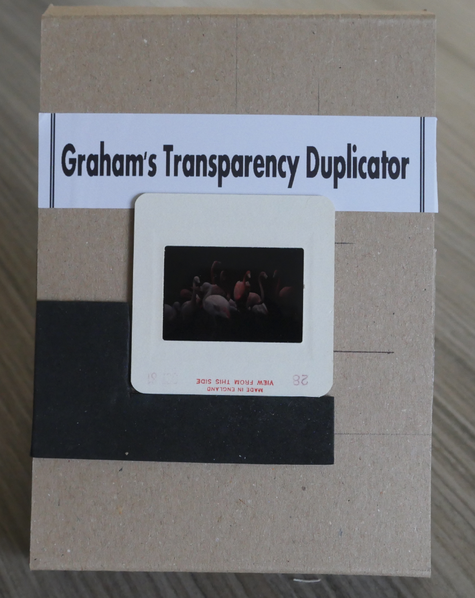

Easy Slide Duplication with a Digital Camera

We all may have numerous 35mm transparencies stored somewhere in the house, reminiscent of the time when only film was available to capture our timeless images.

There are commercial ventures which will scan these onto DVD for you at a reasonable cost. There are also dedicated slide scanners or attachments for fitting to a flat bed scanner which would allow you to do this yourself.

I have used dedicated scanners and attachments with varying degrees of success; however, I wanted to capture a few more images from slides that I recently found in the garage after a long-overdue clear-out. Unfortunately, they had been stored for many years in far from ideal conditions, and the dreaded mould had begun to eat away at some of the emulsion, looking like fine hairs on the image.

Capturing the image of a transparency could be done by projecting the slide onto a white sheet of paper and photographing it.

This would work, but the result would suffer from distortion: the camera could not be on the same axis as the projector, so the image would be keystoned one way or another depending on the camera position.

It is also likely to give less than perfect results as you are using another lens system to form the image to be captured and finally white balance might be an issue.

So I started to look at building a simple rig which would let a white light source shine through the transparency from behind, so that I could focus my camera on the face of it and capture that image.

I wanted to keep it as simple as possible so that it could be replicated if you wanted to try this yourself.

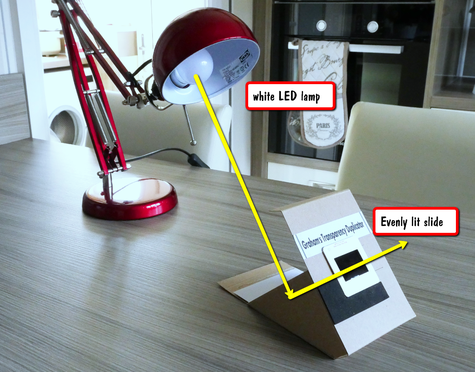

So in essence this is the set up I developed to capture my transparencies.

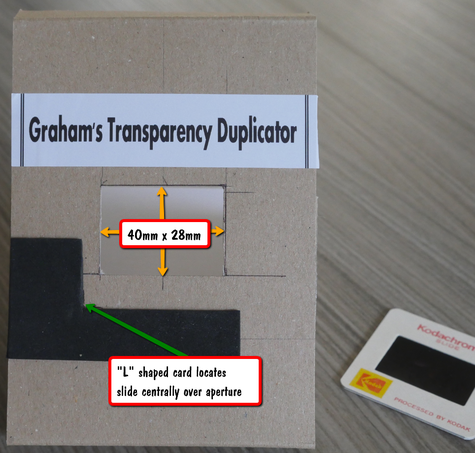

A simple rig made of the first piece of cardboard I could find to hold the transparency at 45 degrees to the table.

The front face of the rig has an aperture cut in it measuring 40mm x 28mm, which is 4mm oversize on the 36mm x 24mm frame of a 35mm slide.

Light from a table lamp (or could be daylight or flashlight) is bounced at 45 degrees from a white card in the base of the unit. This acts as the diffuser and even backlight to the slide.

The transparency is located centrally over the aperture in the face of the rig, and an "L"-shaped cardboard strip is glued at a position that allows the transparency to be aligned into its corner, thus centralising the slide in the aperture.

Details of the aperture cut-out in the front panel of the rig, which measures 100mm wide x 160mm high (4 inch x 6 inch).

The "L" shaped card is glued to provide accurate location of each transparency as it is being captured by the camera.

The base of the rig has a white card (I used a matt surface card) to act as the backlight for the transparency.

The front of the rig is held at 45 degrees by gluing cardboard side pieces which also stiffen up the unit.

To capture the images of the transparencies placed on this unit I used my Panasonic FZ330. Although this camera will focus close enough to capture the image, it is really difficult to operate at this distance (about 1.5cm or 1/2 inch), and I also noticed quite a bit of barrel distortion. To overcome this I used a Raynox 150 macro lens with the camera zoomed to 200mm and set to a 3:2 aspect ratio. The working distance was 160mm from the front of the Raynox lens, giving ample room to place the transparencies for capture. Once I had the correct position for the camera on the tripod, I stuck the rig to the table with a couple of pieces of self-adhesive tape to prevent it moving.

Without anything in the aperture, I switched on my desk lamp (fitted with a 5W Daylight LED lamp) and performed a manual white balance.

I took a well-exposed transparency as my test image, set the aperture to F4 and the ISO to 100 in Manual mode, and then adjusted the shutter speed to give the correct exposure (about 1/10 second with my lamp about 6 inches from the card reflector). Once the image looks right, make no further adjustment to the exposure, and don't use auto mode.

Use the two-second timer on the camera, a wired cable release or the Lumix app on a smartphone to trigger the shutter. The camera can be left on autofocus, which will allow for any difference in thickness of slide mounts.

The only critical thing here is to keep the camera centred on the transparency with the camera back parallel to it, to prevent distortion. This also ensures that focus is accurate from edge to edge and side to side.

Working with this rig it is possible to capture about 5 images per minute or 300 per hour. Use the electronic shutter to reduce vibration and camera shake on the tripod.
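As a rough planning aid, the capture rate above can be turned into an estimate for a whole collection. This is a minimal sketch assuming a steady 5 slides per minute with no breaks or reloading time; `capture_time_hours` is a hypothetical helper written for illustration.

```python
def capture_time_hours(num_slides: int, slides_per_minute: float = 5.0) -> float:
    """Estimate the hours needed to capture num_slides at a steady rate."""
    minutes = num_slides / slides_per_minute
    return minutes / 60.0


# 5 slides per minute works out at 300 slides per hour.
print(f"{capture_time_hours(300):.1f} hours for 300 slides")
# A hypothetical collection of 1,000 slides.
print(f"{capture_time_hours(1000):.1f} hours for 1,000 slides")
```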

You could use any camera here, for example a Micro Four Thirds camera with an extension tube set, or a close-up macro lens attached to the camera lens, provided you can capture the whole image (with a slight crop to remove the edges of the transparency holder) at a reasonable working distance.

Some more examples from using this method are shown below.

4k Photo and Video Shooting with Panasonic Cameras

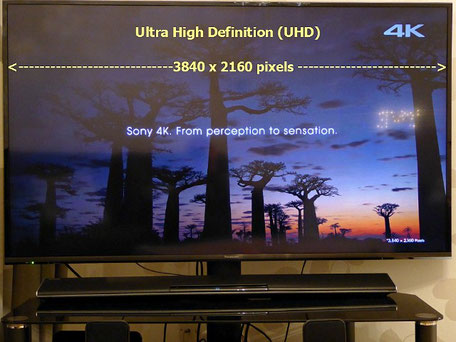

Ultra High Definition (UHD), more commonly known as 4K, is the newest standard for current television models.

Simply put, 4K refers to the horizontal width of the screen expressed in pixels. True 4K would have 4096 pixels on each horizontal line, but the industry has adopted a standard with 3840 pixels, referred to as UHD or Ultra High Definition.

The step from SD to HD saw roughly a five-fold increase in the number of pixels in a screen image (from 720 x 576 to 1920 x 1080 in PAL regions), and UHD extends this by a further factor of 4. We all saw the benefit of material captured in HD (1920 x 1080) on an HD-capable display, but the oversampling also meant that these images looked much crisper, with lots of detail, even when displayed on a standard definition screen. UHD is having the same impact for those who have seen a true UHD display or UHD material displayed on a 1080 HD screen.
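The pixel arithmetic behind these steps is easy to check. This sketch assumes the PAL 720 x 576 raster for SD (NTSC SD at 720 x 480 would give a larger SD-to-HD ratio); it also shows that one UHD frame holds about 8.3 million pixels, which is where the 8-megapixel 4K photo figure comes from.

```python
# Pixel counts for common video rasters. The SD entry assumes the PAL
# 720 x 576 raster; NTSC SD (720 x 480) would give a larger SD-to-HD ratio.
FORMATS = {
    "SD (PAL)": (720, 576),
    "HD": (1920, 1080),
    "UHD": (3840, 2160),
    "DCI 4K": (4096, 2160),
}


def pixel_count(name: str) -> int:
    width, height = FORMATS[name]
    return width * height


def pixel_ratio(smaller: str, larger: str) -> float:
    """How many times more pixels `larger` has than `smaller`."""
    return pixel_count(larger) / pixel_count(smaller)


for name in FORMATS:
    w, h = FORMATS[name]
    print(f"{name:9} {w} x {h} = {pixel_count(name):>9,} pixels")

print(f"SD -> HD:  {pixel_ratio('SD (PAL)', 'HD'):.1f}x")
print(f"HD -> UHD: {pixel_ratio('HD', 'UHD'):.1f}x")
```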

At the moment most 4K content is only available via web-based streaming services such as YouTube. However, this does allow you to sample the benefits of 4K.

The Panasonic Lumix FZ330 records 4K video (3840x2160, 25p/30p), as well as 4K photo at 30fps producing 8-megapixel images. The three options when shooting 4K photo are:

4K burst (30fps), records while the shutter is pressed

4K burst Start/Stop, records after you've pressed the shutter release button and stops when you press it again

4K Pre-burst shooting, the camera records images before and after you've pressed the shutter release.

So why do we as photographers want to shoot with this new 4K video mode or the 4K photo mode?

Well, the first advantage is the rate of capture we can achieve. Most consumer digital cameras can only shoot 5-8 images per second in burst mode, which is much slower than 4K.

You might get one or two good images through practice and timing, however to obtain several images in the same sequence is something of a holy grail.

In contrast, shooting in the 4K modes gives you 30 images for every second of capture. With so many images in a sequence, your chances of getting more "keepers" go up considerably.
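To put those frame rates in perspective, this sketch computes the gap between frames and how far a moving subject travels in that gap. The 10 m/s subject speed is a hypothetical example value, roughly that of a fast sprinter.

```python
def frame_interval_ms(fps: float) -> float:
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


def travel_between_frames_m(subject_speed_m_s: float, fps: float) -> float:
    """Distance a subject moves between consecutive frames, in metres."""
    return subject_speed_m_s / fps


SUBJECT_SPEED = 10.0  # hypothetical subject speed in m/s

for label, fps in [("Typical burst", 5), ("Fast burst", 8), ("4K photo", 30)]:
    print(
        f"{label:13} {fps:2} fps: a frame every {frame_interval_ms(fps):5.1f} ms, "
        f"subject moves {travel_between_frames_m(SUBJECT_SPEED, fps):.2f} m between frames"
    )
```

The shorter the gap, the more likely one frame lands on the peak of the action.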

With 4K shooting we are moving huge amounts of image data between the sensor and the SDXC storage card, so there must be no bottleneck in the workflow that would stop the file being written completely and glitch-free.

We have seen in previous articles here that we need a storage card with the latest U3 classification rating in order to have some headroom in data transfer. Fortunately the price of these cards keeps falling, and it's now possible to own 64GB versions specifically for video shooting.

Storing all that data for editing on your computer also calls for a fast, high-capacity hard drive: at least a 7200rpm conventional hard drive on a USB 3.0 or Serial ATA (SATA) connection.
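To see why storage fills so quickly, here is a minimal sketch converting a video bit rate into storage used, assuming the 100Mbps maximum 4K bit rate and decimal gigabytes (1GB = 1000MB, as card makers use); file-system overhead is ignored.

```python
def mb_per_second(bitrate_mbps: float) -> float:
    """Convert megabits per second to megabytes per second (8 bits per byte)."""
    return bitrate_mbps / 8


def gb_per_minute(bitrate_mbps: float) -> float:
    """Decimal gigabytes written per minute of recording."""
    return mb_per_second(bitrate_mbps) * 60 / 1000


def minutes_on_card(card_gb: float, bitrate_mbps: float) -> float:
    """Approximate recording time, ignoring file-system overhead."""
    return card_gb / gb_per_minute(bitrate_mbps)


rate = 100  # Mbps, assumed maximum 4K bit rate
print(f"{mb_per_second(rate)} MB/s, {gb_per_minute(rate)} GB per minute")
print(f"About {minutes_on_card(64, rate):.0f} minutes on a 64GB card")
```

At 0.75GB per minute, an hour of footage is about 45GB before any editing copies are made.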

Shooting in 4K varies from camera model to camera model.

Some, like the FZ1000 with firmware update 2.0, allow video to be captured in any photo aspect ratio rather than just the default 16:9 HD video format. The desired frame can then be extracted from this video clip in camera or later in photo editing.

Cameras like the FZ330 have, in addition to this video mode, the three dedicated 4K photo shooting modes.


Again, the desired image(s) can be selected from the captured sequence and saved as individual JPEG images.

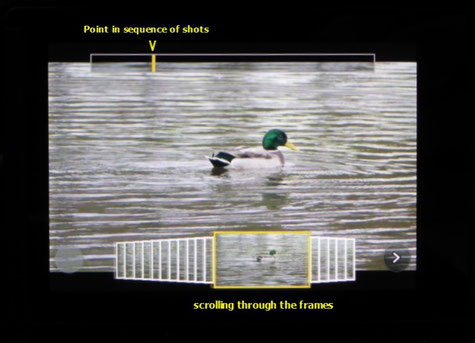

With the FZ330 in the dedicated 4K photo mode, the exact frame can be selected by scrolling through the sequence until the desired point of the action is reached.

Once the point is selected, it is saved as a separate image on the memory card.

The extracted image from the 4K dedicated photo shooting mode of the FZ330

Of course acquisition in 4K video or photo is really only the beginning of your workflow, especially if you are embracing 4K video.

4K photo mode needs to be approached in the same way as shooting regular still frames.

You still need to consider ISO and light levels. For smooth-looking video the shutter speed is normally set to the reciprocal of twice the frame rate (1/60 sec at 30fps), but for still images this will lead to subject motion blur.

So although the frame rate is 30fps, the shutter speed needs to be set fast enough for each image within the sequence to be blur-free.

This is best determined by the 1/focal length rule, using the 35mm-equivalent focal length. So at wide angle 1/30 sec is sufficient, but at the long end of the telephoto lens we need to be using at least 1/500 sec, even allowing for the camera's OIS.
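The two rules of thumb mentioned above can be written down directly. This is a sketch of the conventional 180-degree rule for video and the reciprocal rule for hand-held stills; the intermediate focal lengths are arbitrary sample points across a 25-600mm equivalent zoom such as the FZ330's. Neither rule accounts for fast subject motion, which may need faster speeds still.

```python
def video_shutter_denominator(fps: float) -> float:
    """180-degree rule: shutter of 1/(2 x frame rate) for smooth-looking video."""
    return 2 * fps


def handheld_shutter_denominator(equiv_focal_mm: float) -> float:
    """Reciprocal rule: shutter of at least 1/(35mm-equivalent focal length)."""
    return equiv_focal_mm


FPS = 30
print(f"Video at {FPS}fps: about 1/{video_shutter_denominator(FPS):.0f} sec")

# Sample focal lengths across a 25-600mm equivalent zoom.
for focal in (25, 100, 300, 600):
    print(f"{focal:3}mm equivalent: 1/{handheld_shutter_denominator(focal):.0f} sec or faster")
```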

Video workflow is something I will be covering in a future article. Unless you have a video editor that can do "proxy" editing, where the edit is made on a smaller-resolution file and then applied to the final 4K footage, you will run into performance issues with many of the PC systems currently available.

The FZ1000 and the 4K Video Stills Acquisition

Using the 4K video mode implemented in the FZ1000, it is remarkably easy to extract an 8-megapixel still from the video, either in camera or in post-processing with PHOTOfunSTUDIO software. With firmware version 2 in the camera you can now record the video at the aspect ratio you want the still picture to be in, rather than the usual 16:9 aspect ratio of HD video.

Shooting at 24fps allows you to finely select the part of an image sequence that captures the perfect moment in the action. Provided the shutter speed is set fast enough to avoid subject motion blur, the frames are indistinguishable from still images. In the image sequences below you can see the actual frames from a video shot in this way. The individual frames could of course be post-processed if necessary.

Cheap USB table top lighting project

I need to photograph lots of small objects for the blog and other projects, so a small, easy-to-set-up light source is required. I have several large LED light panels, but these require me to get out a light stand to set them up. I needed something that could be hand-held if necessary and be quick to set up and use. I found a really cheap USB LED lamp sold in the UK Poundland stores (it is also available on Amazon http://www.amazon.co.uk/COOLEAD-5V-Camping-Light-Emergency-White/dp/B00RVGMKL8/ref=sr_1_13?ie=UTF8&qid=1447701142&sr=8-13&keywords=usb+light). It has a very good colour rendering index, runs only slightly warm, and can be powered from a computer USB port, though it is better powered from a USB charger or power bank.

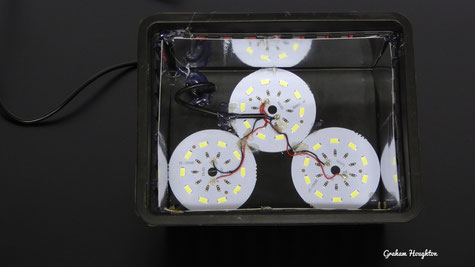

To achieve a broad light output with sufficient power I decided to put 3 of these lights into one unit.

The actual light element consists of 10 surface-mount LEDs on a circular PCB. I found a suitable plastic basket to hold 3 of the discs (again from Poundland).

I began by opening the bulbs: the diffuser just pops off, and then I cut the wire, leaving enough to be soldered inside the box. (Alternatively, you could bring the three connections out of the box and connect all three leads together.)

I mounted the discs inside the box using hot-melt glue on the back of the discs.

The three PCBs were soldered in parallel, and a single connection to the USB plug was brought out through the plastic box via a rubber grommet. A simple knot in the cable acts as a restraint to stop the wire being pulled off the PCBs, and hot-melt glue holds the wiring in place.

To reflect light back into the unit, rather than let it escape through the sides of the plastic basket, I added some silvered card to act as a reflector.

To provide a little diffusion for the LED point light sources, I fastened a small translucent carrier bag over the front of the basket with elastic bands. Power for the unit comes from a small 8400mAh USB power bank.

A sample image taken with the TZ70 using this light source at about 1/2 metre away from the model

1/4 sec F5.5 ISO 200. No other light in the room (total darkness)

Understanding The Speed Rating Of SDHC and SDXC Memory Cards

There are wide discrepancies in memory card transmission speeds depending on the SD memory card manufacturer and brand. Varying speeds make it difficult to determine which card will provide reliable recording of streaming content. Recording video requires a constant minimum write speed to ensure smooth playback. The SD Association defines Speed Class standards, indicated by speed symbols, to help consumers decide which card will provide the required minimum performance for reliability.

The SD Speed Class and UHS Speed Class rating symbols indicate minimum writing performance to ensure smooth writing of streaming content such as video shooting. This is important mainly for camcorders, video recorders and other devices with video recording capabilities.

Speed Class designates the minimum write performance for recording video. The Speed Classes defined by the SD Association are Class 2, 4, 6 and 10, where the number is the card's minimum write speed in MB/s.

UHS Speed Class is designed for UHS devices only and designates the minimum write performance for recording video on UHS products. In Panasonic cameras, this is the rating that matters for recording 4K.

The UHS Speed Classes defined by the SD Association are UHS Speed Class 1 (U1) and UHS Speed Class 3 (U3).

U1 guarantees a 10MB/s write rate and U3 a 30MB/s write rate.

So how can we use this data to work out which card is required to shoot video in our camera? Camera manufacturers tend to specify not MB/s (megabytes per second) but Mbps (megabits per second).

As an example, the FZ330 records AVCHD video at 28Mbps. To convert this to MB/s we need to divide by 8, as there are 8 bits in a byte.

So 28Mbps = 3.5MB/s, and that's why Panasonic recommend a memory card of at least Class 4 (4MB/s) to record video.

When it comes to recording 4K video the bit rate is 100Mbps, so the minimum card speed would be 100 divided by 8 = 12.5MB/s.

Ideally, to guarantee no data loss, a card with a higher rating than this would be chosen, so U3 would be the only choice. However, 100Mbps is the absolute maximum bit rate recorded, and it may only occur in a scene with lots of fine detail and some subject motion. You will probably find that a U1 card works just fine, as the classes are minimum specifications: individual U1 cards may write at anywhere from the minimum 10MB/s to over 20MB/s. The Kingston card shown above, for example, is a U1 card but quotes a 30MB/s speed. If you look closely there is a tiny letter R after it; this refers to the card's read rate, which is usually a lot faster than its write performance.
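The Mbps to MB/s reasoning above can be captured in a few lines. This is a minimal sketch that picks the slowest class whose guaranteed write speed covers a given bit rate; it uses only the classes described in this article and applies no extra safety margin.

```python
# Minimum sustained write speeds (MB/s) for the SD Association classes above.
SPEED_CLASSES = [
    ("Class 2", 2),
    ("Class 4", 4),
    ("Class 6", 6),
    ("Class 10 / U1", 10),
    ("U3", 30),
]


def mbps_to_mb_per_s(bitrate_mbps: float) -> float:
    """Megabits per second to megabytes per second (8 bits per byte)."""
    return bitrate_mbps / 8


def minimum_speed_class(bitrate_mbps: float) -> str:
    """Slowest class whose guaranteed write speed covers the bit rate."""
    needed = mbps_to_mb_per_s(bitrate_mbps)
    for name, min_write in SPEED_CLASSES:
        if min_write >= needed:
            return name
    raise ValueError(f"{needed} MB/s exceeds every listed class")


for mbps in (28, 100):
    print(f"{mbps}Mbps needs {mbps_to_mb_per_s(mbps)} MB/s -> {minimum_speed_class(mbps)}")
```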

One other thing that affects write performance is fragmentation of the memory on the card. As with any file storage system, files become fragmented as images and video are deleted from the card individually. This leaves non-contiguous free space and slows down writing to the card. It is good practice to format the card in the camera before shooting video, in particular 4K video.

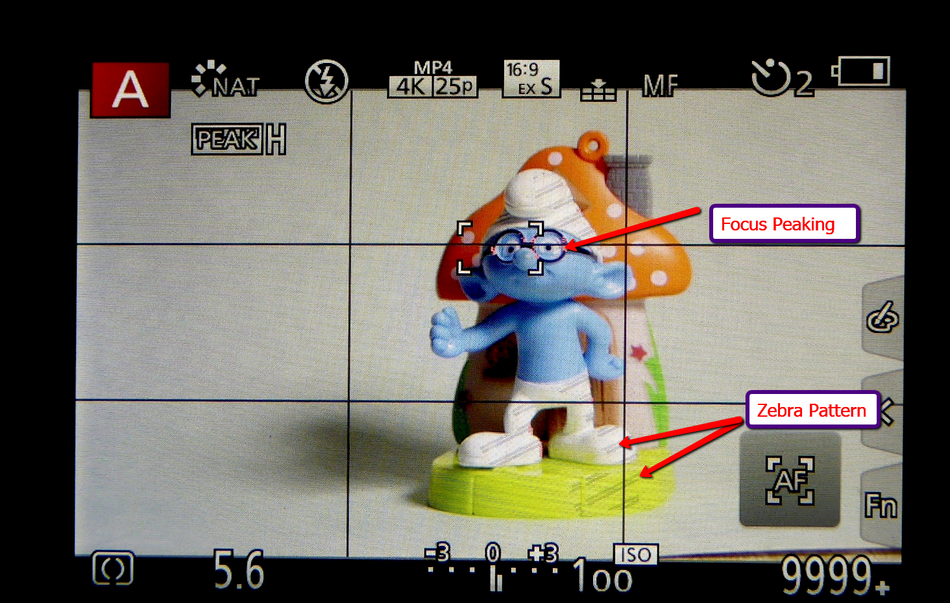

ZEBRA ZONE HIGHLIGHT WARNING AND FOCUS PEAKING

One of the great additions to the later series of Panasonic cameras is a pair of new aids in the viewfinder.

The first is "zebra zones" which appear as diagonal stripes on any part of the image which is overexposed and will contain no, or very little, highlight detail.

Through the setup menu you can set your own highlight warning percentage and have two preset levels available for different types of images. Traditionally we used the "highlight warning" after taking the shot to indicate the areas that were overexposed. These became known as the "blinkies", as those areas would alternately flash black and white on the image.
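In essence, a zebra display compares each pixel's brightness with the chosen threshold and overlays diagonal stripes where it is exceeded. The sketch below illustrates that idea on a tiny grid of 8-bit brightness values. It assumes the percentage maps linearly onto 0-255, which is a simplification (cameras typically define zebra levels on the video signal), and the stripe width is an arbitrary choice.

```python
def zebra_mask(luma: list[list[int]], threshold_pct: float = 95, stripe: int = 2) -> list[list[bool]]:
    """Return True where a zebra stripe would be drawn.

    A pixel is striped when its 8-bit brightness is at or above threshold_pct
    of full scale AND it lies on one of the alternating diagonal bands.
    """
    limit = 255 * threshold_pct / 100
    return [
        [value >= limit and ((x + y) // stripe) % 2 == 0 for x, value in enumerate(row)]
        for y, row in enumerate(luma)
    ]


# A tiny hypothetical frame: a bright sky (250) above a darker foreground (120).
frame = [[250] * 6, [250] * 6, [120] * 6]
for row in zebra_mask(frame):
    print("".join("/" if striped else "." for striped in row))
```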

Of course this is retrospective and if we had only one opportunity to get that shot the highlights would have been lost in that image. With the zebra zones we see the warning before we take the shot so we can quickly adjust exposure compensation or aperture/shutter in manual mode.

The other addition is the "focus peaking" indication which shows the areas of the image which are considered in focus. It really works best on images where there is sufficient contrast for the edges to be highlighted by the process.

The colour of the highlights can usually be set, so you can choose one that is complementary to the subject and stands out against it. The sensitivity of the edge detection can usually be adjusted as well.
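Focus peaking works by detecting local contrast: a sharp edge has a large brightness jump between neighbouring pixels, a defocused one spreads the same change over several pixels. The sketch below uses a simple neighbour-difference detector as a stand-in; the camera's actual edge filter is not documented here, so treat this as an illustration of the principle only. The `sensitivity` parameter plays the role of the adjustable detection level.

```python
def peaking_mask(luma: list[list[int]], sensitivity: int = 30) -> list[list[bool]]:
    """Mark pixels whose local contrast exceeds `sensitivity`.

    Local contrast here is the larger of the absolute brightness differences
    to the right-hand and lower neighbours; a lower sensitivity value
    highlights more (weaker) edges.
    """
    height, width = len(luma), len(luma[0])
    mask = [[False] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            dx = abs(luma[y][x + 1] - luma[y][x]) if x + 1 < width else 0
            dy = abs(luma[y + 1][x] - luma[y][x]) if y + 1 < height else 0
            mask[y][x] = max(dx, dy) > sensitivity
    return mask


sharp_edge = [[20, 20, 220, 220]]  # crisp, in-focus transition
soft_edge = [[20, 70, 120, 170]]   # the same transition spread out (defocused)
print(peaking_mask(sharp_edge))
print(peaking_mask(soft_edge, sensitivity=60))
```

With a low sensitivity value even the soft edge is highlighted, which is why the setting matters on low-contrast subjects.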

In the above image the zebra stripes show the areas exposed at 95% or above (you can set your own threshold value), and the pink areas show the plane of focus.

The Need For Speed

With Burst mode shooting in the stills mode and 4K video recording it is now essential to use an appropriately fast SDHC or SDXC card in your camera.

Still images have a far higher resolution: a typical consumer camera may capture around 12 megapixels of detail, and high-end models often record more than 20 megapixels.

Each image may therefore contain ten times as much information as a comparable Full HD video frame (1920 x 1080, about 2Mp). And because every image stands alone, JPEG compression options are more limited. Indeed, photographers wishing to capture the full tonal depth and quality of a scene will probably shoot in RAW mode, with no compression applied. A single image captured in this way can easily require 16MB of storage or more.

If your camera produces 16MB image files, a slow write SD card will clearly be a drag on shot to shot exposures. A class 2 card will take eight seconds to record a single image. Even a fast 30MB/sec SD card will still take a good half-second to store each image onto the card.
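The write times quoted above follow from dividing file size by the card's sustained write speed. A minimal sketch, using the 16MB example file and ignoring any camera-side processing time:

```python
def write_time_s(file_mb: float, card_mb_per_s: float) -> float:
    """Seconds to write one file at the card's sustained write speed."""
    return file_mb / card_mb_per_s


RAW_FILE_MB = 16  # the example file size used above

for label, speed in [("Class 2", 2), ("Class 10", 10), ("30MB/s card", 30)]:
    print(f"{label:12} {write_time_s(RAW_FILE_MB, speed):.2f} s per image")
```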

Fortunately, this needn’t mean always waiting half a second between consecutive exposures. To implement a faster write operation, every digital camera has a buffer of very fast dynamic memory, in which images are initially stored when you press the shutter, before being written to the SD card at whichever speed the card supports. This means that even if you have a slow SD card, you can snap freely until the buffer fills up. Once it does, however, you won’t be able to shoot again until the camera frees up some space by moving images onto the SD card. The size of the buffer varies from camera to camera, but the principle is the same for all models.

The speed of your SD card, therefore, doesn’t affect how quickly you can fire off two images. It comes into play only after you’ve shot sufficient images, in a short enough space of time, to fill the camera’s buffer.

You can confirm this by putting your camera into burst drive mode and holding down the shutter so that it fires off a burst of exposures. You’ll probably find that the first few shots trigger in quick-fire succession, but then the rate slows down, as the camera can now only take additional exposures as quickly as it can write images to the SD card.
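The buffer behaviour described above can be modelled with a simple frame-by-frame simulation: each shot adds a file to the buffer, and between shots the card drains it at its write speed. The 200MB buffer, 16MB file size and 6fps burst rate are hypothetical example values, not figures for any particular camera, and the model ignores processing time.

```python
def shots_before_slowdown(
    buffer_mb: float,
    file_mb: float,
    fps: float,
    card_mb_per_s: float,
    max_shots: int = 1000,
) -> int:
    """Count the shots taken at full burst rate before the buffer is full.

    Each shot needs file_mb of free buffer space; between shots the card
    drains card_mb_per_s / fps megabytes. Returns max_shots if the card
    keeps up indefinitely.
    """
    used = 0.0
    drained_per_interval = card_mb_per_s / fps
    for shot in range(1, max_shots + 1):
        if used + file_mb > buffer_mb:
            return shot - 1  # this shot must wait for the card
        used += file_mb
        used = max(0.0, used - drained_per_interval)
    return max_shots


# Hypothetical camera: 200MB buffer, 16MB RAW files, 6 frames per second.
for card in (2, 30, 120):
    n = shots_before_slowdown(200, 16, 6, card, max_shots=100)
    print(f"{card:3} MB/s card: {n} shots at full speed")
```

In this model a faster card lengthens the full-speed burst, and once the card can write faster than the camera produces data the burst is limited only by other factors.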

SD card speed ratings

The first challenge is understanding the relative speeds of different cards. Helpfully, all SD cards are rated with a “class”, which reflects their performance. There are four standard ratings, which you’ll see advertised as class 2, 4, 6 and 10; these respectively guarantee that the card can sustain a write speed of 2MB/sec, 4MB/sec, 6MB/sec or 10MB/sec.

The class system makes it easy to distinguish the slowest cards. When it comes to high-end cards, however, it’s useless, since a card that supports 40MB/sec will receive the same class 10 rating as a 12MB/sec card.

For this reason, manufacturers may supplement a card’s class rating with an explicit declaration of transfer speeds in megabytes per second. They may also give a speed rating as a multiplication factor, such as “100x” or “200x”. This reflects how much faster the card is than a standard CD-ROM drive with a transfer speed of 150KB/sec; a rating of 66x or above would thus be equivalent to class 10.

A 200x rating would imply a transfer rate of 30MB/sec.
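The "x" ratings convert to MB/s by multiplying by the 150KB/sec of a single-speed CD-ROM drive. This sketch assumes 1MB = 1000KB (using 1024 gives slightly lower figures); remember the result is usually a peak figure, not a guaranteed sustained write speed.

```python
CD_ROM_KB_PER_S = 150  # single-speed (1x) CD-ROM transfer rate


def x_rating_to_mb_per_s(x_rating: float) -> float:
    """Convert an 'x' speed rating to MB/s, taking 1MB = 1000KB."""
    return x_rating * CD_ROM_KB_PER_S / 1000


for x in (66, 100, 200):
    print(f"{x}x = {x_rating_to_mb_per_s(x):.1f} MB/s")
```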

Be warned that these ratings don’t have a standard meaning in the way that class ratings do. Unless the manufacturer explicitly asserts otherwise, the figures quoted on the packaging could reflect the card’s theoretical maximum read speed – rather than its minimum sustained write speed, which is the important factor for camera performance.

You may also see cards marked with a UHS-I rating. This indicates compatibility with the relatively new Ultra High Speed (UHS) bus, which raises the theoretical maximum transfer speed from 25MB/sec to 104MB/sec (the later UHS-II bus goes up to 312MB/sec). However, certification on its own doesn't tell you anything about the write performance of the card: a UHS-I certified card could be slower than an uncertified one.

The class rating system has its limitations, but it can be a handy guide to the practical capabilities of different cards. A Class 4 or Class 6 rating means the card is guaranteed to be fast enough for Full HD video; which one you need depends on the bit rate of the video format you're using (Class 4 for AVCHD and Class 6 for MP4).

The highest rating, UHS class U3, is aimed at stills photographers and 4K video shooters. The idea is to minimise the time it takes to write a photograph to the card, so you can take multiple shots in rapid succession without having to wait around for each one to be stored.

Cards with a UHS Speed Class 3 rating, such as the SanDisk Extreme PRO SDHC/SDXC UHS-II memory cards, can capture uninterrupted 4K, 3D and Full HD video at a minimum sustained write speed of 30MB/s. The only caveat here is that not all cameras can use UHS-II; at the moment the GH5 is the only Panasonic camera that can.

The Gold Series “SDUC” introduced by Panasonic provides this UHS Speed Class 3 (U3) rating and is capable of stable, continuous real-time video recording at a minimum write speed of 30MB/s (240Mbps). It can be used not only for unlimited 4K video recording in MOV/MP4 format, but also for ultra-high-bitrate video recording at 200Mbps (ALL-Intra) or 100Mbps (IPB), enabling smooth, high-quality video without the dropouts caused by an insufficient write speed.

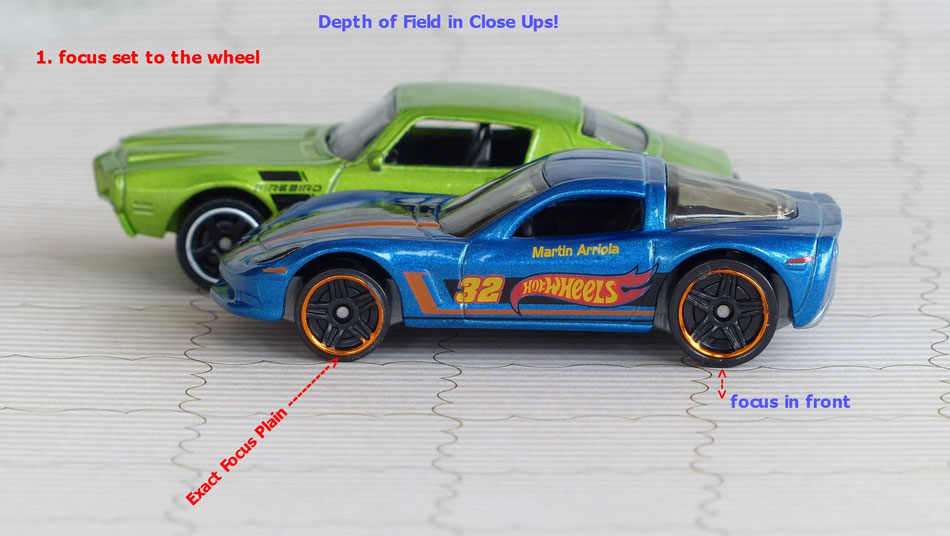

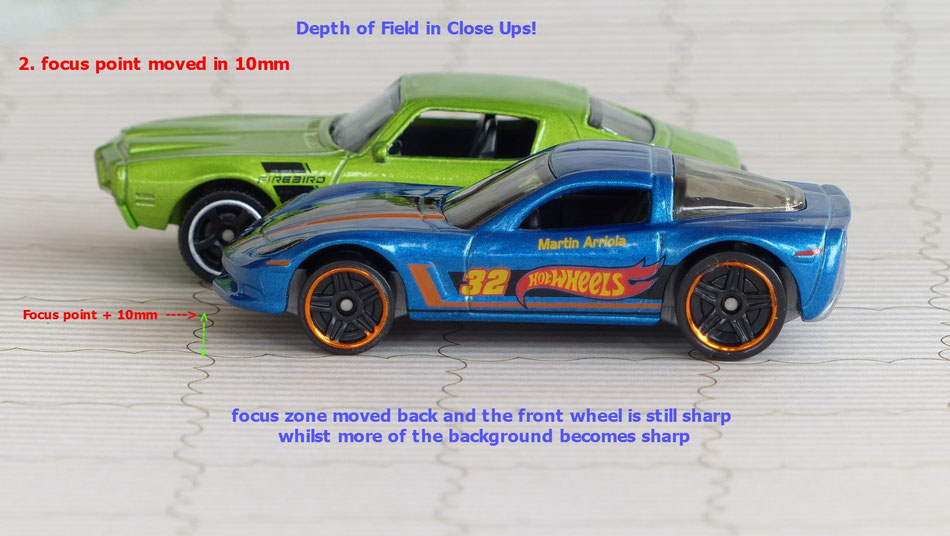

Maximising Depth of Field in Close ups

I guess we are all guilty (yes, me included!) of setting up the camera in single-point AF mode and placing the focus square on the subject to take our shot. There's nothing wrong with that, except that we may be sacrificing some depth of field in doing so. Nowhere is this more obvious than in macro or close-up work. In this type of photography we need to use as much depth of field as we can to get front-to-back sharpness, as sometimes we are looking at just 6mm of total focus depth!

From the laws of optics we know that at a given focus distance there is always a zone in front of and behind that point which will be acceptably sharp; we call it the depth of field. It depends on a few factors, such as the pixel size, the lens focal length, the camera-to-subject distance and of course the aperture setting. When photographing a subject close up, we quite often focus on the front of the subject. If there is nothing in front of this point, the zone in front of it plays no part in forming the resulting image and is therefore wasted. If we move the focus point further into the subject, that zone becomes part of the image and the back of the subject becomes sharper, as the new focal point has moved further in.

To illustrate this, here's a table-top set-up. The first image is focused on the tyre of the model and the second a little further in. You can see that the second image has more of the subject in focus, as we have moved the whole depth of field zone into the picture. In practical terms, set the camera to manual focus, use the "rocking" technique to find the front focus point, and then move forward slightly to take the shot!

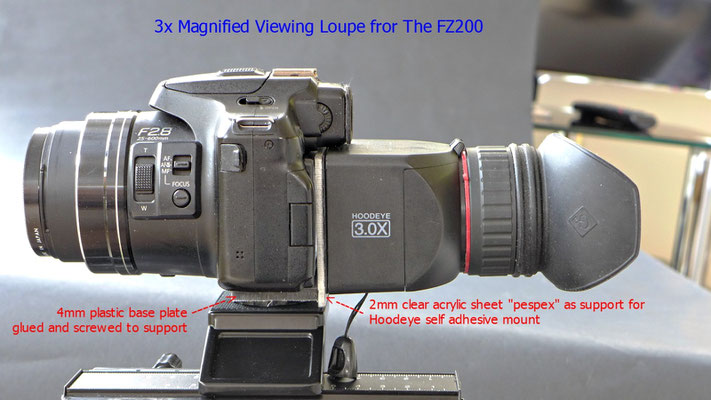

Alternative Large Format LCD Viewing Aid for the FZ200

I had a request several weeks ago from a subscriber wanting help with an alternative viewfinder, as he had a slight visual impairment and was having difficulty using the small EVF of the FZ200. My first attempt at a solution was to use a magnifying eyepiece designed for Nikon cameras, but this meant permanently fixing the Nikon eyepiece to the plastic surround of the FZ200 EVF. Although it worked after a fashion, it wasn't a total success. I then switched to designing a rig which would hold the Hoodeye 3.0X magnifier loupe to the LCD screen. The Hoodeye has a swing-up section giving an unmagnified view of the whole screen or, when swung down, a magnified view of the whole screen.

With its 1 inch eyepiece lens and large rubber eye cup, the Hoodeye provided a very neat solution to the problem. It's at the prototype stage, and I think that when the new HD screen of the FZ330 makes an appearance this will be a great addition for stills photographers and videographers wanting a crystal-clear view of what they are shooting.

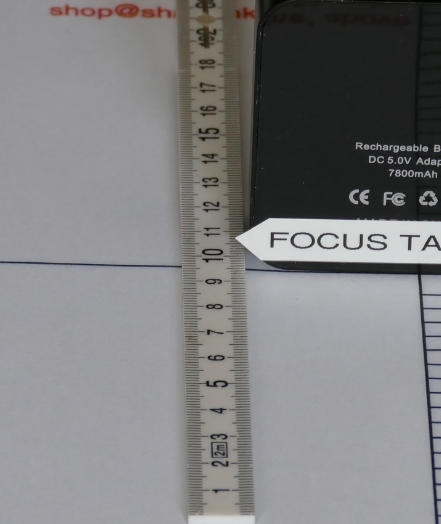

Determining FZ200 Plane of Focus

Over a couple of weeks I have had some "focus" issues with my FZ200. Images I was sure were in focus when I pressed the shutter button turned out to be out of focus when I reviewed them.

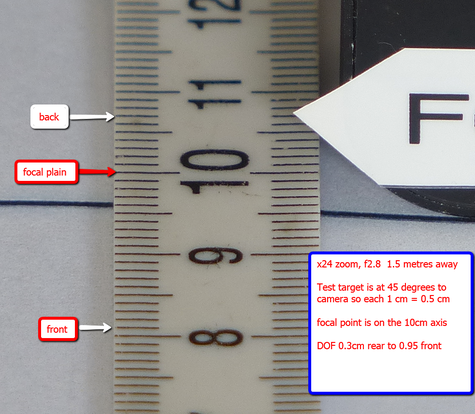

I know some DSLRs have an adjustment to change the back focus of the lens, but as the FZ200 uses a "live view" sensor I thought that if the camera acquired focus under the target area (I use AFS with either the smallest or the next largest target size), the camera would be in perfect focus at that point. I devised a rig, similar to the one used to calibrate back focus on a DSLR, to establish exactly where the depth of field lay at any given focal length. Somewhere in the back of my mind I had a figure for the depth of field being split 60% behind and 40% in front of the focus point at wide angle, moving to an almost equal 50/50 split at full telephoto zoom.

Basically, a ruler is placed on a horizontal surface and a focus line is drawn on the target or, as I have done here, a high-contrast marker is added exactly aligned with the 10 on the scale. The camera is positioned on a tripod at 45 degrees to the target. I used my compound slide so that I could make objective measurements during the test.

I used 24x zoom and f/2.8 as my starting point and set the AF point on the high-contrast target, using the 2 second timer to trigger the exposure with the OIS turned off.

As the ruler is at 45 degrees to the camera axis, each 1cm along the ruler represents about 0.7cm (cos 45° ≈ 0.71) of depth along the axis. I repeated the focus test 3 times to get an average value.
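Because the ruler lies at an angle to the camera axis, readings along it have to be projected onto that axis. A minimal sketch, assuming the ruler lies flat and the optical axis meets it at the stated angle:

```python
import math


def axial_depth_cm(ruler_cm: float, angle_deg: float = 45.0) -> float:
    """Depth along the camera axis represented by a distance read along a
    ruler that lies at angle_deg to that axis."""
    return ruler_cm * math.cos(math.radians(angle_deg))


for reading in (0.5, 1.0, 2.0):
    print(f"{reading} cm on the ruler = {axial_depth_cm(reading):.2f} cm of depth")
```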

I used my macro slider to help me position the focus point and to let me make calibrated changes to the manual focus position, which was set using the focus button on the side of the lens to force an AF operation.

This is the test image from the conditions as set above.

As you can see, the depth of field is unequally distributed when the camera is focused on the 10 mark on the scale.

So rather than the 50/50 split I was expecting, the test showed a distribution biased towards the front of the image. This matches what I noticed when photographing my table-top models: quite often the rear of the image would be out of focus when it should have been covered by the lens's depth of field.

In the service procedures there is a routine to set back focus using a light collimator, but as I don't have access to one, or to the setup software, I will live with this, as I now know how much bias to put into my focusing to get everything sharp!

What I was getting with the camera focused round about the front door seam.

As you can see the rear wheel is out of focus!


Now using the same point for focus using the focus button on the side of the lens in Manual Focus mode I then moved the camera position 1 cm to the rear using the macro slide. As a result the whole model is now acceptably sharp!

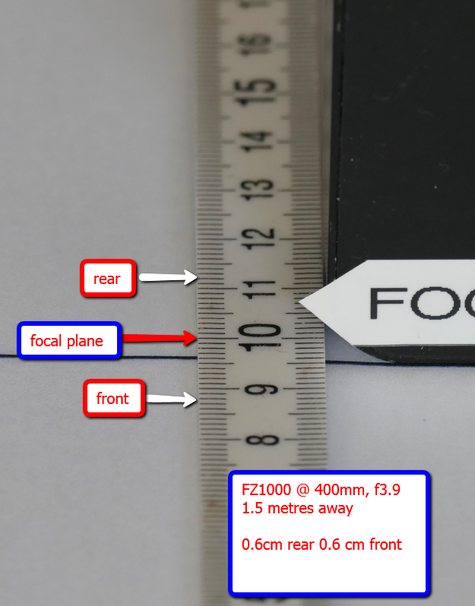

I repeated the same test with my FZ1000 and it performed as I would have expected the FZ200 to have done!

Native and Expanded ISO Ranges

With cameras like the FZ1000 there is an option to use an "expanded" ISO range. These settings are normally hidden from view, as they are not considered to deliver the image quality expected in the "normal" ISO range. The expanded range has its uses, but it does have some quality issues. "Expanded" means that the change in light sensitivity is made by multiplying (or dividing) the analogue-to-digital converter output, rather than by adjusting the gain of the amplifier attached to the pixels on the sensor itself. For example, when the exposure is set for ISO 12800, the sensor is set for ISO 6400 and the sensor results (digitised by the ADC) are multiplied by two. Noise is also amplified by two, so you will see much more noise at this expanded level! At the low end of the expanded range we suffer from blown highlights, because the sensor receives more light than at its lowest native ISO and saturates sooner before the data is scaled down. So if a subject is susceptible to blown highlights, this will be exaggerated with the expanded (LO) ISO.
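Both effects can be illustrated numerically. In this sketch the 12-bit full-scale value is a hypothetical choice, and the ISO 125 base with an ISO 80 Lo setting follows the example later in this section. The "push" shows signal and noise being multiplied together; the "pull" shows how much highlight headroom is lost because the sensor saturates earlier.

```python
import math

FULL_SCALE = 4095  # hypothetical 12-bit raw full-scale value


def push_expanded(raw_value: float, noise: float, multiplier: float = 2.0) -> tuple[float, float]:
    """Expanded high ISO: multiply the digitised data after the ADC.

    Signal and noise are scaled by the same factor, and the result still
    clips at full scale.
    """
    return min(raw_value * multiplier, FULL_SCALE), noise * multiplier


def pull_expanded(native_base_iso: float, lo_iso: float) -> tuple[float, float]:
    """Expanded low ISO: expose as for lo_iso, then scale the data down.

    The sensor receives native_base_iso / lo_iso times more light than at
    base ISO, so it saturates on scene highlights only lo_iso / native_base_iso
    as bright as the brightest it could record at base ISO.
    Returns (that fraction, stops of highlight headroom lost).
    """
    fraction = lo_iso / native_base_iso
    return fraction, math.log2(native_base_iso / lo_iso)


print(push_expanded(1000, 10))  # signal and noise both doubled
clip_fraction, stops_lost = pull_expanded(125, 80)
print(f"ISO 80 from base 125: clipping at {clip_fraction:.0%} of the base-ISO highlight level, "
      f"about {stops_lost:.2f} stops of headroom lost")
```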

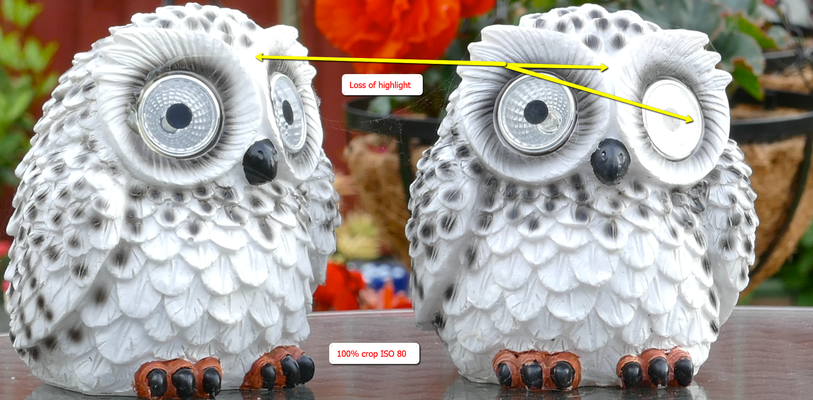

Here's an example of using the expanded Lo setting of ISO 80 compared to ISO 125 in the native ISO range.

Flash Synchronisation

When using electronic flash with modern digital cameras it is important to understand how flash synchronisation happens.

Traditional film cameras initially used flash bulbs and then as electronic flash guns became available it was necessary to alter the way in which this firing mechanism worked.

These magnesium wire flash bulbs were manufactured in a variety of "speeds".

These speeds were based upon the time from ignition to the bulb burning at full brightness. Typical values were between 5 and 30 milliseconds.

To allow the bulb to reach full intensity before the shutter opened, the flash contacts were triggered ahead of the shutter opening so that the brightness could reach its peak. You would find the M and F sync letters on the shutter synchronisation settings, standing for M (medium speed, 30 milliseconds) and F (fast speed, 5 milliseconds).

When electronic flash entered the scene a new synchronisation mode had to be employed. As electronic flash is a very brief exposure of light, it is important that the shutter is fully open before the brief pulse of light is emitted by the flash unit. X synchronisation was developed to allow this to happen. However, this did create some problems depending on the type of camera you possessed. With leaf-shutter cameras, where the shutter is typically in the lens itself, the problem didn't arise and the camera could synchronise at all preset shutter speeds.

When SLRs were introduced with a mechanical focal-plane (roller blind) shutter, in which one blind opens and then a second blind closes to terminate the exposure, the problems started to manifest themselves.

There is a physical limit to how fast these curtains can move, so to achieve much faster shutter speeds the shutter mechanism works differently. In this situation the first and second curtains travel at the same time, exposing the film or sensor through a moving band of light. The faster the shutter speed, the shorter the delay between the two curtains and the narrower the slit exposing the film or sensor. So the image is built up by a moving band of light (and you may be familiar with the rolling shutter effect, where any subject moving during this traversing time appears distorted from top to bottom, with a slanted appearance).

If we use electronic flash in this mode, the very brief pulse of light happens almost as soon as the curtains open, and the rest of the image receives no more flash exposure. When we look at the resulting image there is usually a black band at the top of the image.
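A small simulation shows why only part of the frame records the flash. It is a sketch under simplified assumptions: the flash pulse is treated as instantaneous, both curtains move at constant speed, and the function name and parameters are illustrative rather than taken from any camera specification.

```python
def flash_lit_fraction(travel_time_s: float, exposure_s: float,
                       flash_time_s: float, rows: int = 1000) -> float:
    """Fraction of frame rows that are uncovered at the instant the flash fires.

    Row position runs from 0 (where the curtains start) to 1 (where they end).
    Each row is uncovered when the first curtain passes it and covered again
    exposure_s later, when the second curtain passes it.
    """
    lit_rows = 0
    for row in range(rows):
        opens = (row / rows) * travel_time_s
        closes = opens + exposure_s
        if opens <= flash_time_s < closes:
            lit_rows += 1
    return lit_rows / rows


if __name__ == "__main__":
    travel = 0.004  # hypothetical 4 ms curtain travel
    # At 1/125 s the whole frame is open when the first curtain completes its travel
    print(flash_lit_fraction(travel, 1 / 125, flash_time_s=travel))
    # At 1/1000 s only a slit is open, wherever it happens to be when the flash fires
    print(flash_lit_fraction(travel, 1 / 1000, flash_time_s=0.002))
```

Rows outside the slit at that instant receive no flash at all, which is what produces the dark band; which edge of the final picture it falls on depends on the direction of curtain travel.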

To overcome this problem and still allow the camera to use shutter speeds faster than the normal flash synchronisation speed (typically 1/125 to 1/320 second depending upon the camera manufacturer), a process called high speed sync (HSS) was developed. This required a new type of flash unit capable of pulsing its light several thousand times per second. To do this the flash has to run at reduced power, firstly to allow the flash capacitor to recharge and secondly to avoid overheating and melting the flash tube.

Using HSS, the camera behaves exactly as it would at any faster-than-sync shutter speed, i.e. with the moving slit, but the flash gun fires very high frequency pulses continuously for the whole duration of the slit's travel across the frame. The film or sensor is thus exposed by a rapid series of flash pulses. The compromise here is the power level at which the flash gun has to operate to allow these rapid bursts of light to occur. It can typically be as low as 1/8th power, so flash-to-subject distances have to be quite short to achieve sufficient exposure from the flash.
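The effect of reduced power on range follows from the guide number (GN), where GN = flash-to-subject distance × f-number at a given ISO. Because light falls off with the square of distance, cutting power to 1/8 reduces the GN, and so the range, by a factor of √8 ≈ 2.8. The GN of 58 m below is a hypothetical example, and in HSS the effective output usually drops further as the shutter speed rises, so treat these figures as an upper bound.

```python
import math


def flash_range_m(guide_number_m: float, f_number: float,
                  power_fraction: float = 1.0) -> float:
    """Maximum flash-to-subject distance (metres) for a correct flash exposure.

    guide_number_m is the full-power guide number at the ISO in use.
    Light output scales with power_fraction, but because of the inverse-square
    law the usable distance scales only with its square root.
    """
    effective_gn = guide_number_m * math.sqrt(power_fraction)
    return effective_gn / f_number


if __name__ == "__main__":
    gn = 58.0  # hypothetical full-power guide number in metres
    for power in (1.0, 1 / 2, 1 / 4, 1 / 8):
        print(f"power {power:.3f}: {flash_range_m(gn, 4.0, power):.1f} m at f/4")
```

On these assumptions a flash that reaches 14.5 m at f/4 on full power reaches only about 5 m at 1/8 power.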

The relationship between the curtains opening and the flash firing is known as first or second curtain synchronisation.

If the flash fires as the first curtain opens and the subject is moving (typically horizontally), any ambient light will continue to expose the subject after the flash.

This leaves a streak that appears to precede the sharp flash image, which doesn't look "right". If there is any possibility of movement and ambient light continuing to expose the image, it is better to use second curtain synchronisation. In this method the exposure commences as normal and the flash fires at the end of the exposure, so the motion blur appears behind the subject and looks more natural.

With compact digital cameras and bridge cameras a focal-plane shutter is not used. The shutter is normally in the lens, and flash can synchronise at any of the available shutter speeds. This is far easier to use and doesn't require an HSS-enabled flash gun.

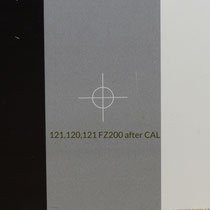

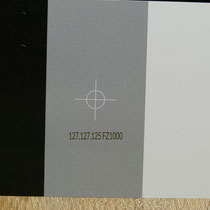

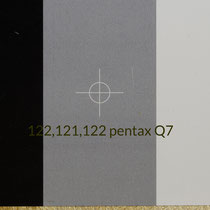

Calibrating A Panasonic Lumix Camera Auto White Balance Neutral Point

The purpose of the camera's automatic white balance is to set an operating point such that the image produced has a neutral colour balance; that is, whites are white and greys are grey without a hint of any colour bias.

With my two FZ200 cameras there is an appreciable difference in how an image is rendered by each, given the same set-up conditions.

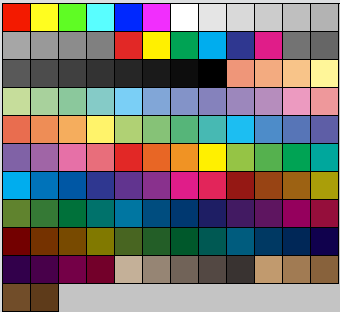

There are "tools" such as the Macbeth colour patches which allow you to check or calibrate the camera, or to apply a colour profile in your image processing workflow, such as Camera Raw or Lightroom.

I took the image shown left using a daylight-balanced LED light panel, with the camera set to AWB mode and JPEG output.

Opening the image in Photoshop and using the INFO tool to measure the RGB values of the grey patches shows that, in this lighting condition, the camera is producing an image with a slight cyan/blue cast, as indicated by the readout. Of course, how you see this on your own PC screen will depend upon how well the display is calibrated. This important factor is often overlooked when we post-process our images and adjust colour balance to make them appear neutral. If our screen display has a "bias", we are likely to offset the image we are correcting by the opposite amount to make it appear correct. If we are the only ones viewing the image on the same screen then this is fine. However, if we publish the image to, say, a photo sharing site, the image seen by other people could look entirely different!

These digitally generated colour swatches can give you an idea of how your own PC display is performing at the moment. The greys in these sets should be neutral with no colour cast. To check that your contrast is set correctly, you should be able to distinguish the 6th, 7th and 8th dark grey patches on the third row. If they all appear to be the same shade, your contrast may be set too high or your brightness too low.

If you compare the second grey patch on the camera image with the third grey patch of the digital greys, you will see the offset bias!

These are the two images overlaid, so you can see the difference between what the camera "defines as neutral" and actual neutral.

So how do we go about setting the camera's Auto White Balance neutral operating point? You will need a neutral grey white balance card, and this isn't as easy to find as it sounds. You can buy 18% grey cards or sets of white balance cards, but their colour accuracy isn't guaranteed. You really need a set of cards with a certified tolerance for colour variation. I use one purchased from Amazon which is relatively inexpensive (£12) and certified to a 2% tolerance.

See www.greywhitebalancecolourcard.co.uk for more details.

To begin, set up the chart in front of the camera, evenly lit and with no surface reflections. Position the camera at the same height as the centre of the card and try to fill the frame with the card.

Ensure you have sufficient light intensity to set the camera at its lowest ISO value, to keep noise down in the final image. Set AWB as the white balance preset and take the exposure.

Now open this JPEG file in an image editing program where you can measure the RGB values of the colour patches. In Photoshop we use the INFO tool.

Here the camera image is opened in Photoshop Elements, with the cursor positioned in the patch to be measured and the INFO panel showing its RGB values. Set the sample size to 5x5 pixels so that the reading is an average, which prevents noise from affecting the results.
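Averaging a block of pixels is what the 5x5 sample size does, and the idea is easy to sketch. This assumes the image has already been decoded into rows of (R, G, B) tuples (for example with an imaging library; that step is not shown), and average_patch is a hypothetical helper name.

```python
def average_patch(pixels, x, y, size=5):
    """Mean (R, G, B) of a size x size block centred on column x, row y.

    pixels is a list of rows, each row a list of (r, g, b) tuples.
    Blocks that run past the image edge are clipped to the image.
    """
    half = size // 2
    top, left = max(0, y - half), max(0, x - half)
    block = [px
             for row in pixels[top:y + half + 1]
             for px in row[left:x + half + 1]]
    count = len(block)
    return tuple(round(sum(px[channel] for px in block) / count)
                 for channel in range(3))


if __name__ == "__main__":
    # Hypothetical noisy grey patch: red alternates 3 above and below 207
    noisy = [[(207 + (-3 if (r + c) % 2 else 3), 214, 233) for c in range(10)]
             for r in range(10)]
    print(average_patch(noisy, 5, 5))
```

A single-pixel reading on that patch would swing between 204 and 210 in red, whereas the 5x5 average settles on 207.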

Write down the values obtained from the test patch: 207 214 233 in this case, where 207 is Red, 214 is Green and 233 is Blue.

The first step is to evaluate the change needed to correct the imbalance.
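One simple way to quantify the imbalance is to compare each channel with the average of the three. This is a sketch rather than the author's method: colour_cast is a hypothetical helper, and using the three-channel mean as the neutral target is an assumption (you could equally measure against green).

```python
def colour_cast(rgb):
    """Return each channel's offset from the three-channel mean, and the worst channel."""
    mean = sum(rgb) / 3
    offsets = {name: round(value - mean, 1) for name, value in zip("RGB", rgb)}
    worst = max(offsets, key=lambda name: abs(offsets[name]))
    return offsets, worst


if __name__ == "__main__":
    offsets, worst = colour_cast((207, 214, 233))
    print(offsets, worst)  # {'R': -11.0, 'G': -4.0, 'B': 15.0} B
```

For the measured patch this reports blue 15 above the mean and red 11 below it, matching the reading: blue too high, red low.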

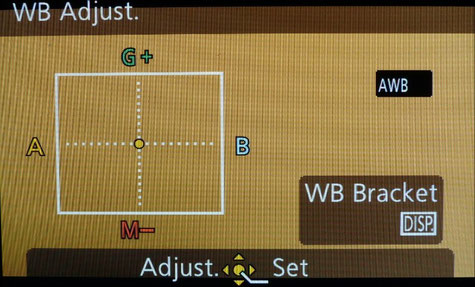

In this case Blue is too high, and we correct that in camera along the yellow/blue (A-B) axis of the white balance adjustment.

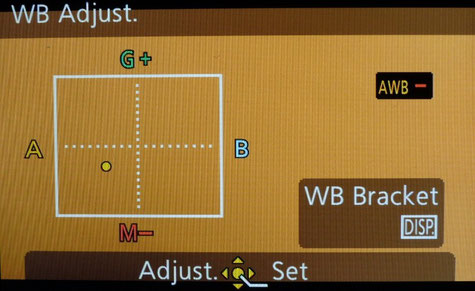

If we go to the white balance adjustment screen, by pressing the right navigation button on a selected preset, we see an adjustment tool that allows us to shift the neutral point as the camera "sees" it.

The two axes represent Green/Magenta tint on the vertical axis and illuminant colour temperature on the horizontal axis. Moving the dot towards the A icon warms the image and towards the B cools it down. In the quadrants between the axes we can apply additional bias to correct for any other shift. So, for example, if we move the spot towards A and down towards M, we are effectively applying a red bias and decreasing the colour temperature (making the image appear warmer). Remember that higher colour temperatures are bluer: a blue midday sky is probably around 10,000K, while a candle flame, which we consider very warm, is only about 1900K.

As our image needs an increase of yellow (the complementary colour of blue) to reduce the blue value, we move the dot towards A (yellow), and because the red was slightly low we also move the dot into the red sector (between A and M) to increase it.

If you consider B as cyan, then the quadrant from B to M is the blue quadrant, so if the blue RGB value were low rather than high you could increase it by moving into the blue sector.

Once you have made the adjustment, re-shoot the card and examine the image again in Photoshop to determine whether any further adjustment is needed.

I don't yet have a formula that converts RGB changes into the movement needed along the axes.

Once you have fine-tuned the white balance neutral point, any of the grey patches you measure should have similar R, G and B values, as seen in the image left. Aim for a difference of no more than 10 across the 3 colours. In the example left the difference is 2.
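The pass/fail check can be written down directly: take the spread between the highest and lowest channel of each grey patch and compare it with the limit of 10. Apart from the original 207 214 233 reading, the patch values in the example are hypothetical, and check_greys is an illustrative helper name.

```python
def check_greys(patches, tolerance=10):
    """Return (rgb, spread, passed) for each measured grey patch."""
    results = []
    for rgb in patches:
        spread = max(rgb) - min(rgb)
        results.append((rgb, spread, spread <= tolerance))
    return results


if __name__ == "__main__":
    readings = [
        (207, 214, 233),  # before calibration (the reading from the test above)
        (212, 213, 214),  # hypothetical reading after adjustment
    ]
    for rgb, spread, passed in check_greys(readings):
        print(rgb, spread, "OK" if passed else "adjust again")
```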

The same adjustment can then be applied to all the other presets (Sunny, Cloudy, Shade etc.) to ensure they are more accurate as well.

It would even apply to the manual white balance preset: once you have performed a manual white balance, go into WB Adjust and add the same bias that you determined in Auto White Balance mode.

I have just repeated the AWB test using 4 cameras: the Canon 5D Mk III, the calibrated FZ200, the FZ1000 and the Pentax Q7. All the tests showed the cameras capable of setting a very neutral operating point; the 5D showed variations of only 1 across the whole test square!

All the tests used auto exposure in Aperture Priority mode at the lowest ISO. My next test may well repeat this using an incident light meter reading, with the cameras set to manual exposure at the same values, to see whether the density variation is due to camera light meter calibration, as the 5D is slightly darker than the others in this test.

Building a USB to 8.4v power supply to power your camera for longer periods outdoors

There may be occasions when you want to use your camera for extended periods outdoors for creating videos, time lapse shooting etc.

Sometimes this is interrupted when your battery runs out!

I was asked by Steve Tyldesley to look at a commercially available power supply, sold in the UK, which supported a 1A 8.4v output

( http://www.amazon.co.uk/EasyAcc-20000mAh-Precise-External-Smartphones/dp/B00LHB51GO)

I then measured how much current several of my cameras were drawing during power-up, zooming and filming.

The FZ200 was taking just under 0.5A in the worst case and the FZ1000 0.7A. The G6 was 0.9A during recording.

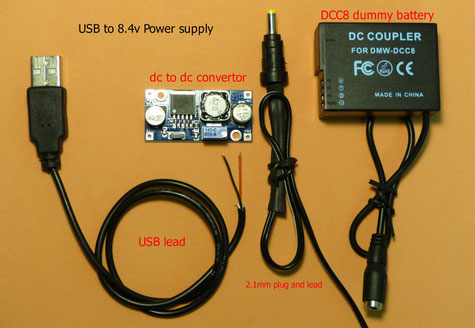

As I have several USB power banks available, I decided to investigate ready-made DC-DC step-up converters which would boost the 5v USB power to the 8.4v required by the camera, using the DMW-DCC8 dummy battery that ships with the AC adaptors for the camera.

(http://www.amazon.co.uk/gp/product/B00EF7J7TE?creativeASIN=B00EF7J7TE&linkCode=w01&linkId=BRAAPWGB2KF5VAEN&ref_=as_sl_pc_ss_til&tag=httpwwwgrah07-21)

It proved feasible to build my own power supply adaptor to use with the power banks I had. This is the project build.

If you can solder the cables to the pcb with a small-tipped soldering iron, and have a digital multimeter so that you can set the output voltage of the unit, then this is a really simple project to build yourself.

This is the first prototype of the project, used to test operation with various cameras. Here the unit is powering my FZ1000 from my 7600mAh battery pack, which has over 6 times the mAh rating of the 1200mAh BLC12E battery.
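Comparing mAh figures alone flatters the power bank, because the two batteries work at different voltages; stored energy in watt-hours is mAh × volts ÷ 1000. This sketch assumes the power bank's 7600mAh is quoted at a 3.7v cell voltage (common for power banks, but check the label) and uses the 7.2v, 1200mAh rating of the DMW-BLC12E. It ignores converter losses, which reduce the usable figure further.

```python
def watt_hours(capacity_mah: float, voltage_v: float) -> float:
    """Stored energy in watt-hours from a capacity in mAh at a given voltage."""
    return capacity_mah / 1000 * voltage_v


if __name__ == "__main__":
    bank_wh = watt_hours(7600, 3.7)    # assumes a 3.7v cell rating for the power bank
    camera_wh = watt_hours(1200, 7.2)  # DMW-BLC12E: 7.2v, 1200mAh
    print(f"mAh ratio:    {7600 / 1200:.1f}x")
    print(f"energy ratio: {bank_wh / camera_wh:.1f}x")
```

On these assumptions the power bank holds a little over 3 times the energy of the camera battery, before conversion losses.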

For testing I added a digital voltmeter pcb to monitor the voltage of the unit under load. I also used a USB power monitor to check the current drawn from the power banks, to ensure it didn't exceed the rating of either the 1A or 2A output ports available.
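Because the converter steps 5v up to 8.4v, it draws noticeably more current from the USB port than the camera draws from the converter. Input power equals output power divided by the converter efficiency; the 85% efficiency below is an assumed figure, and losses in the USB cable are ignored.

```python
def usb_input_current_a(v_out: float, i_out: float,
                        v_in: float = 5.0, efficiency: float = 0.85) -> float:
    """Current drawn from the source by a step-up converter (P_in = P_out / efficiency)."""
    return (v_out * i_out) / (efficiency * v_in)


if __name__ == "__main__":
    # Worst-case camera currents measured above, at 8.4v
    for camera, amps in (("FZ200", 0.5), ("FZ1000", 0.7), ("G6", 0.9)):
        i_in = usb_input_current_a(8.4, amps)
        port = "1A port OK" if i_in <= 1.0 else "needs the 2A port"
        print(f"{camera}: {i_in:.2f} A from USB ({port})")
```

On these assumptions the FZ200 only just fits a 1A port, while the FZ1000 and G6 need the 2A port, which is why monitoring the USB current during testing is worthwhile.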

These are the component parts needed to complete the build, and there will shortly be a construction video on YouTube showing how to make this yourself.

You will additionally need a small soldering iron and a small plastic box to fit the finished unit into.

I will provide as many links to the component parts as I can, or give a detailed description so you can source your parts locally.

The electronics mounted inside a small plastic enclosure

The completed project

The parts needed for the project

The AC power supply unit from Amazon, which included the DMW-DCC8 dummy battery for the DMW-BLC12E battery used in the FZ200, GH2 and FZ1000 cameras

Ex-Pro® Panasonic DMW-AC8 AC Mains Power Supply Adapter & DMW-DCC8E Battery coupler kit for Panasonic Lumix SLR DMC-G5, DMC-G6, DMC-GH2, DMC-FZ200

An old USB printer cable, or any USB charger cable, is used to connect the power bank to the pcb.

To connect the pcb to the DMW-DCC8, a 2.1mm power extension cord is used. This can be a separate purchase or, if you don't intend to use the mains power supply, you can cut the lead off that to use with this project.

2.1mm DC Power Plug to Socket CCTV Extension Lead Cable 3m

The DC-DC converter, from Amazon, eBay etc.

DC-DC Step Up Power Apply 3V-32V to 5V-35V XL6009 400KHz 4A Max

Input Range: 3V~32V

Output Range: 5V~35V

Input Current: 4A (max); no-load current less than 18mA (5V input, 8V output). The higher the voltage, the greater the no-load current.

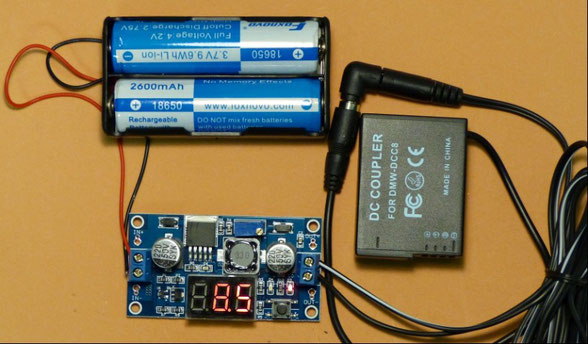

Improved version with solderless connections

This version of the DC-DC step-up converter uses screw terminals and requires no soldering. It even has a built-in digital voltmeter so you can set the output voltage!

For the input power, rather than a USB power bank, I have used two lithium-ion 18650 cells in series in a ready-wired battery box designed for two cells in series. This gives a terminal voltage of 8.4v when fully charged (7.4v nominal); the converter can handle this and keeps its output regulated at 8.4v as the cells' terminal voltage decreases. The unit will deliver power until the 5.5v threshold voltage is reached, thus using the full capacity of the cells. Run time will be in excess of 6 hours with the 2600mAh capacity cells used here.

The above version uses the following module, available from multiple sellers on eBay:

LM2577 DC-DC Adjustable Step-up Boost Power Supply Module with 3-digit Display
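The run time of the two-cell 18650 battery box can be estimated from the pack energy and the camera's average power draw. This is a rough sketch: the 3.7v nominal cell voltage and 85% converter efficiency are assumptions, and the average currents listed are illustrative, because the worst-case figures measured earlier are peaks rather than averages.

```python
def runtime_hours(cell_mah: float, cells_in_series: int, cell_v: float,
                  load_v: float, load_a: float, efficiency: float = 0.85) -> float:
    """Estimated run time: pack energy divided by the power drawn from the pack."""
    pack_wh = cell_mah / 1000 * cell_v * cells_in_series
    input_w = load_v * load_a / efficiency
    return pack_wh / input_w


if __name__ == "__main__":
    for avg_amps in (0.2, 0.3, 0.5):  # illustrative average camera currents at 8.4v
        hours = runtime_hours(2600, 2, 3.7, 8.4, avg_amps)
        print(f"{avg_amps:.1f} A average: about {hours:.1f} hours")
```

The estimate is very sensitive to the average draw, so it is worth measuring that for the way you actually use the camera.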

Depth of Field, something you may not appreciate.

I'm sure everyone has heard of depth of field. It is described as the range of distances that appears acceptably sharp in our image. It varies depending on sensor size, the lens aperture and the focusing distance to the subject.

You might think that focal length should play an important role in determining the depth of field, but for the same subject framing (the same magnification on the sensor) it actually has little effect on the image.
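The standard thin-lens depth of field formulas make this concrete. The sketch below uses the hyperfocal distance H = f²/(N·c) + f, with near limit s(H − f)/(H + s − 2f) and far limit s(H − f)/(H − s); the 0.03 mm circle of confusion is a common full-frame assumption. It compares a 50mm lens at 2 m with a 100mm lens at 4 m (the same framing) and with a 100mm lens at 2 m.

```python
import math


def depth_of_field_mm(focal_mm: float, f_number: float, subject_mm: float,
                      coc_mm: float = 0.03):
    """Return (near limit, far limit, total depth of field) in millimetres.

    Thin-lens approximation using the hyperfocal distance. coc_mm is the
    circle of confusion; 0.03 mm is a common full-frame value.
    """
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    if subject_mm >= hyperfocal:
        far = math.inf  # everything beyond the near limit is acceptably sharp
    else:
        far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return near, far, far - near


if __name__ == "__main__":
    for focal, distance in ((50, 2000), (100, 4000), (100, 2000)):
        near, far, total = depth_of_field_mm(focal, 8, distance)
        print(f"{focal}mm at {distance / 1000:.0f} m, f/8: "
              f"{near / 1000:.2f} m to {far / 1000:.2f} m (total {total:.0f} mm)")
```

With these assumptions the 50mm and 100mm lenses give almost the same depth of field (roughly 0.78 m and 0.76 m) when the framing matches, whereas the 100mm lens at the same 2 m distance gives far less, because the magnification has roughly doubled.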

I set up an experiment to show the effect of aperture and focus distance on depth of field and will show the results below.

You've probably seen that closing down the aperture of a lens increases the depth of field, and that wide open we have the shallowest depth of field. There are two caveats to this, though. If we use the lens wide open we run the risk of "Aberration" limited resolution, and fully closed down "Diffraction" limited resolution. Ideally we want to use the lens at its sweet spot if we want the sharpest images. This is usually about 2 stops down from fully open, though it will vary with lens construction.
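The diffraction side of the trade-off can be estimated from the Airy disk, whose diameter to the first dark ring is about 2.44 × wavelength × f-number. Comparing it with the sensor's pixel pitch gives a rough idea of where diffraction starts to soften detail. The 550 nm (green) wavelength is a standard choice; the 3.75 µm pixel pitch and the "two pixels" threshold are assumptions, a rule of thumb rather than a hard limit. Aberrations at wide apertures are not modelled here.

```python
def airy_disk_diameter_um(f_number: float, wavelength_nm: float = 550.0) -> float:
    """Diameter of the Airy disk (to the first dark ring) in micrometres."""
    return 2.44 * (wavelength_nm / 1000.0) * f_number


if __name__ == "__main__":
    pixel_pitch_um = 3.75  # hypothetical sensor pixel pitch
    for n in (2.8, 4, 5.6, 8, 11, 16):
        d = airy_disk_diameter_um(n)
        note = "diffraction likely visible" if d > 2 * pixel_pitch_um else ""
        print(f"f/{n}: Airy disk {d:.1f} um {note}")
```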